Incident Response Optimization

A Seriously Smart Upgrade.

Prevent, detect and resolve incidents faster than ever before. No matter what your data stack throws at you, your data quality will reach new levels of performance.

No More Overreacting

Sifflet takes you from reactive to proactive, with real-time detection and alerts that help you catch data disruptions before they happen. Watch your mean time to detection fall rapidly, even on the most complex data stacks.

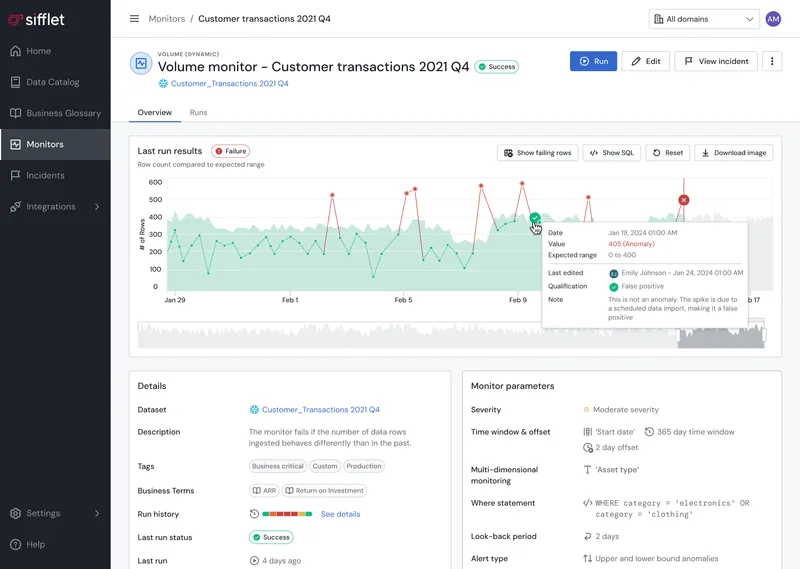

- Advanced capabilities such as multidimensional monitoring help you catch complex data quality issues, even before anything breaks

- ML-based monitors shield your most business-critical data, so essential KPIs are protected and you get notified before there is business impact

- Out-of-the-box (OOTB) and customizable monitors give you comprehensive, end-to-end coverage, and AI helps them get smarter as they go, so you spend even less time reacting

Resolutions in Record Time

Get to the root cause of incidents and resolve them in record time.

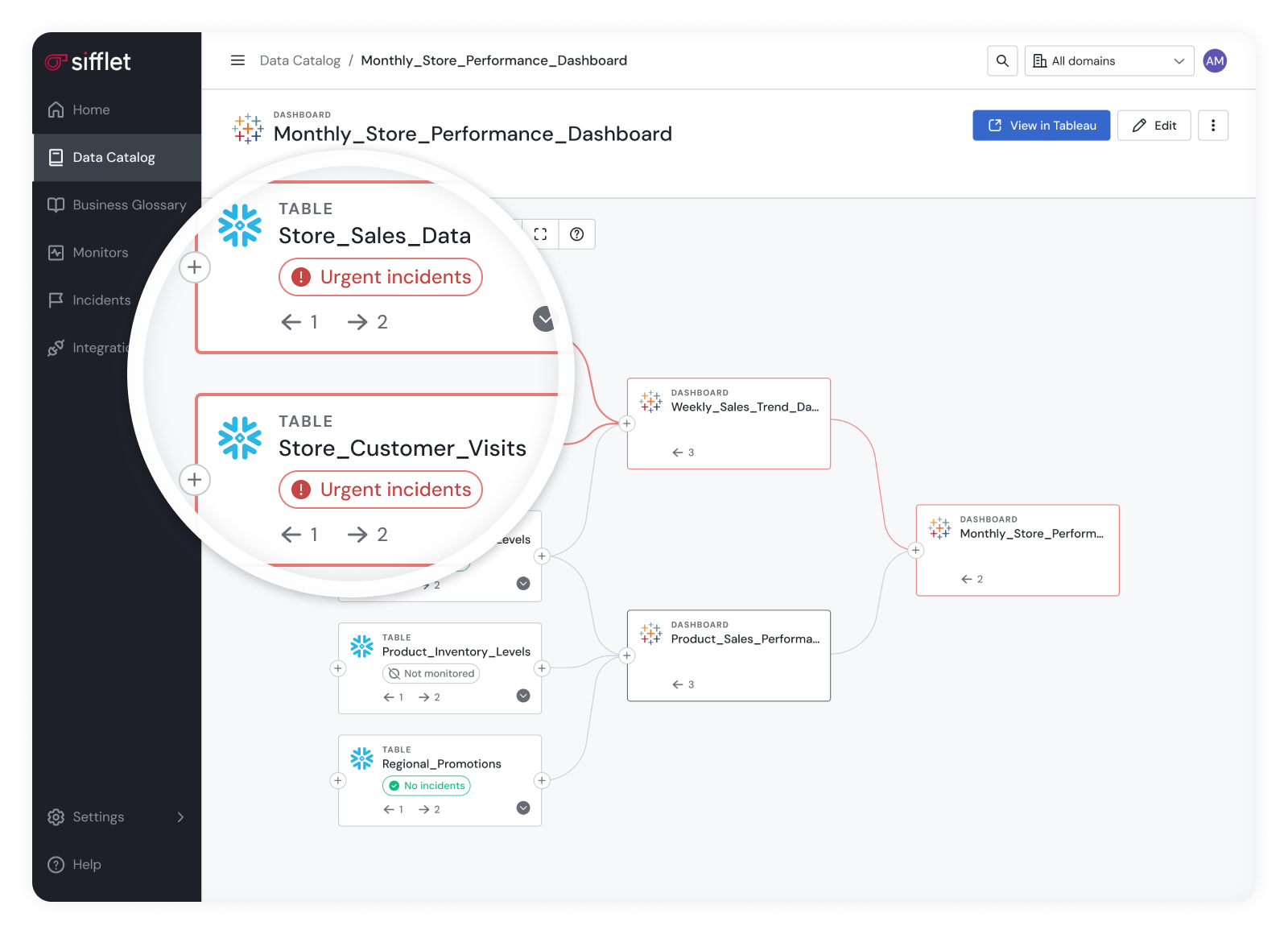

- Quickly understand the scope and impact of an incident thanks to detailed system visibility

- Use data lineage to trace data flow through your system, identify where issues start, and pinpoint downstream dependencies, so business users get a seamless experience

- Halt the propagation of data quality anomalies with Sifflet’s Flow Stopper

Frequently asked questions

How did Carrefour improve data reliability across its global operations?

Carrefour enhanced data reliability by adopting Sifflet's AI-augmented data observability platform. This allowed them to implement over 3,000 automated data quality checks and monitor more than 1,000 core business tables, ensuring consistent and trustworthy data across teams.

What are the key features to look for in a data observability platform?

When evaluating an observability platform, look for strong data lineage tracking, real-time metrics collection, anomaly detection capabilities, and broad integrations across your data stack. Features like field-level lineage, ease of setup, and user-friendly dashboards can make a big difference too. At Sifflet, we believe observability should empower both technical and business users with the context they need to trust and act on data.

How does a unified data observability platform like Sifflet help reduce chaos in data management?

Great question! At Sifflet, we believe that bringing together data cataloging, data quality monitoring, and lineage tracking into a single observability platform helps reduce Data Entropy and streamline how teams manage and trust their data. By centralizing these capabilities, users can quickly discover assets, monitor their health, and troubleshoot issues without switching tools.

How does Sifflet ensure data security within its data observability platform?

At Sifflet, data security is built into the foundation of our data observability platform. We follow three core principles: least privilege, no storage, and single tenancy. This means we only use read-only access, never store your data, and isolate each customer’s environment to prevent cross-tenant access.

What should I look for in terms of integrations when choosing a data observability platform?

Great question! When evaluating a data observability platform, it's important to check how well it integrates with your existing data stack. The more integrations it supports, the more visibility you’ll have across your pipelines. This is key to achieving comprehensive data pipeline monitoring and ensuring smooth observability across your entire data ecosystem.

How did Sifflet help Meero reduce the time spent on troubleshooting data issues?

Sifflet significantly cut down Meero's troubleshooting time by enabling faster root cause analysis. With real-time alerts and automated anomaly detection, the data team was able to identify and resolve issues in minutes instead of hours, saving up to 50% of their time.

What is “data-quality-as-code”?

Data-quality-as-code (DQaC) lets you define and enforce data quality rules programmatically, in code. This ensures consistency, scalability, and better integration with CI/CD pipelines. Read more here to find out how to leverage it within Sifflet.
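As a rough illustration of the general idea (this is not Sifflet's syntax or API), rules can be declared as plain data and checked by a small function that a CI/CD job could run. The `Rule` class, the rule names, and the sample rows below are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


@dataclass(frozen=True)
class Rule:
    """A named data quality rule (hypothetical structure, for illustration)."""
    name: str
    check: Callable[[Row], bool]  # returns True when the row passes


# Rules live in version control next to the pipeline code.
RULES: List[Rule] = [
    Rule("order_id_not_null", lambda r: r.get("order_id") is not None),
    Rule(
        "amount_non_negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]


def evaluate(rows: List[Row], rules: List[Rule]) -> Dict[str, int]:
    """Return the number of failing rows for each rule."""
    return {
        rule.name: sum(1 for row in rows if not rule.check(row))
        for rule in rules
    }


# Made-up sample data: one row has no order_id, one has a negative amount.
sample_rows = [
    {"order_id": 1, "amount": 19.9},
    {"order_id": None, "amount": 5.0},
    {"order_id": 3, "amount": -2.0},
]

failures = evaluate(sample_rows, RULES)
```

A pipeline step could then fail the build whenever any count in `failures` is greater than zero, which is what makes the rules enforceable rather than merely documented.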

What makes traditional data monitoring insufficient for modern retail operations?

Traditional monitoring often relies on batch processing, leading to delays in inventory updates. It also struggles with data silos, lacks robust data quality monitoring, and is mostly reactive. In contrast, modern observability tools provide real-time insights, dynamic thresholding, and predictive analytics monitoring to keep up with fast-paced retail environments.
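To make "dynamic thresholding" concrete, here is a minimal sketch of one common approach, assuming a simple rolling mean and standard deviation rather than whatever model a given observability tool actually uses. The function name, the window size, the multiplier `k`, and the row counts are all illustrative assumptions.

```python
from statistics import mean, stdev
from typing import List


def dynamic_threshold_flags(
    values: List[float], window: int = 5, k: float = 3.0
) -> List[bool]:
    """Flag each value that deviates from the mean of the preceding
    `window` values by more than `k` standard deviations."""
    flags = []
    for i, value in enumerate(values):
        history = values[max(0, i - window):i]
        if len(history) < window:
            # Not enough history yet to set a threshold.
            flags.append(False)
            continue
        mu = mean(history)
        sigma = stdev(history)
        flags.append(abs(value - mu) > k * sigma)
    return flags


# Made-up daily row counts for an inventory table, with one spike.
daily_row_counts = [100, 102, 98, 101, 99, 100, 250, 101]
flags = dynamic_threshold_flags(daily_row_counts)
```

Unlike a fixed limit such as "alert above 200 rows", the threshold here moves with recent history, so the same code adapts to tables of very different sizes. Only the spike of 250 is flagged in this sample.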
