Cloud migration monitoring

Mitigate disruption and risks

Optimize the management of data assets during each stage of a cloud migration.

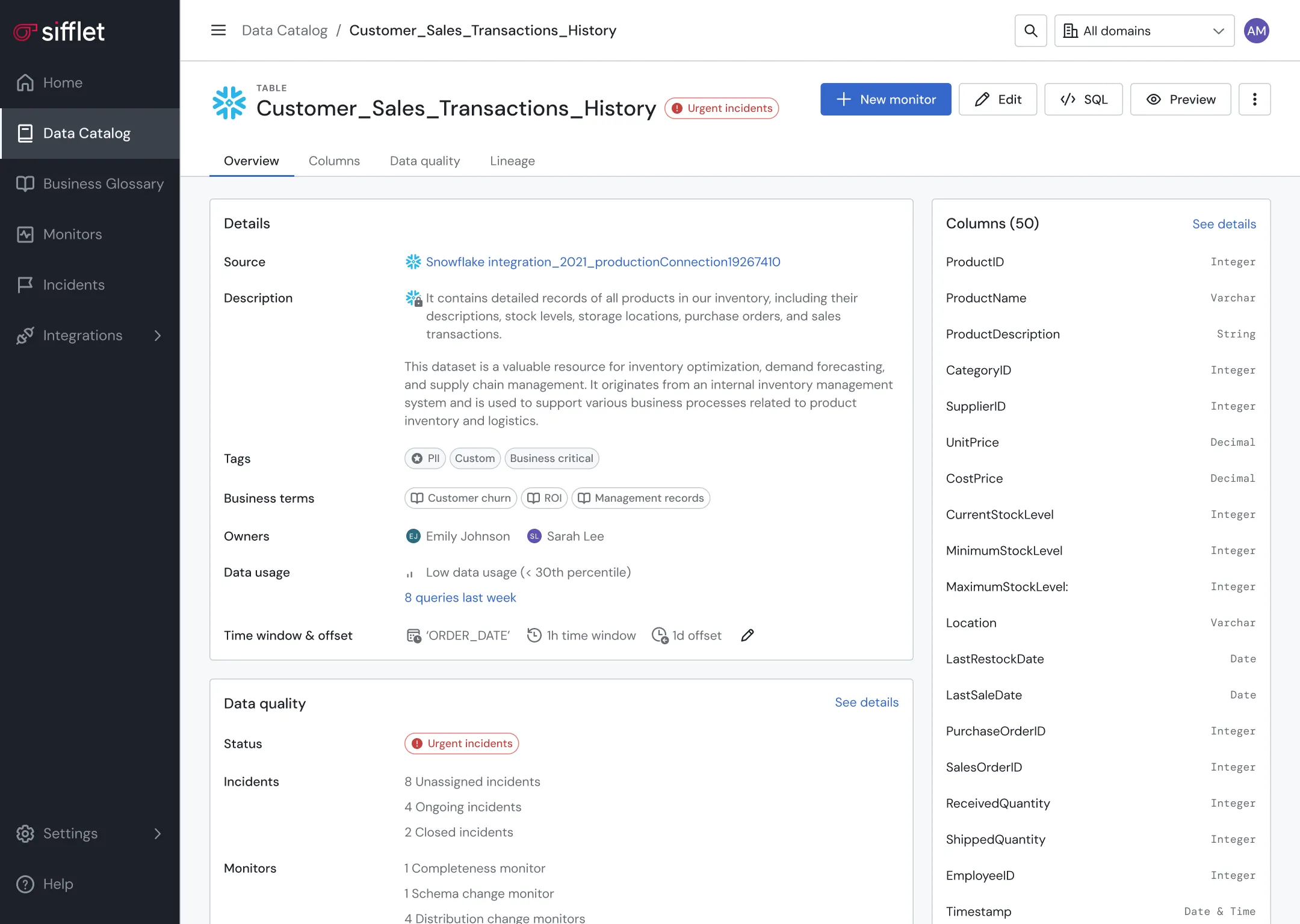

Before migration

- Use the Data Catalog to inventory everything that needs to be migrated

- Identify the most critical assets, based on actual usage, to prioritize migration efforts

- Use lineage to identify the migration's downstream impact and plan accordingly
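To make the lineage step concrete, here is a minimal Python sketch of downstream impact analysis. It is not Sifflet's API. The lineage graph `LINEAGE`, its asset names, and the `downstream_impact` helper are hypothetical, and the sketch assumes lineage is available as a mapping from each asset to the assets that read from it directly. A breadth-first search then collects every asset that migrating the starting asset could affect.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets that read from it directly.
LINEAGE: dict[str, list[str]] = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customers"],
    "marts.revenue": ["dashboard.finance"],
    "marts.customers": [],
    "dashboard.finance": [],
}


def downstream_impact(graph: dict[str, list[str]], asset: str) -> set[str]:
    """Return every asset reachable downstream of `asset` using breadth-first search."""
    seen: set[str] = set()
    queue = deque(graph.get(asset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # already visited; also prevents infinite loops on cyclic lineage
        seen.add(node)
        queue.extend(graph.get(node, []))
    return seen


if __name__ == "__main__":
    print(sorted(downstream_impact(LINEAGE, "staging.orders")))
```

Running it prints `['dashboard.finance', 'marts.customers', 'marts.revenue']`, which is the set of assets to plan around when `staging.orders` moves. The `seen` set keeps the traversal finite even if the lineage graph contains cycles.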

During migration

- Use the Data Catalog to confirm that all data was backed up correctly

- Use dedicated monitors to ensure the new environment matches the incumbent one
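One simple form of such a monitor is a reconciliation check that compares each table in the incumbent environment with its copy in the new one. The sketch below is a hypothetical illustration, not a Sifflet monitor. It assumes both environments can be read as in-memory lists of row tuples, and `table_fingerprint` and `compare_environments` are illustrative names. It compares row counts first, then compares an order-independent checksum built from per-row SHA-256 digests.

```python
import hashlib


def table_fingerprint(rows: list[tuple]) -> tuple[int, str]:
    """Return the row count and an order-independent checksum for a table."""
    # Hash each row separately, then sort the digests so row order does not matter.
    # Assumption: rows are tuples whose repr() is identical across environments.
    row_digests = sorted(hashlib.sha256(repr(row).encode("utf-8")).hexdigest() for row in rows)
    table_digest = hashlib.sha256("".join(row_digests).encode("utf-8")).hexdigest()
    return len(rows), table_digest


def compare_environments(
    source: dict[str, list[tuple]], target: dict[str, list[tuple]]
) -> dict[str, str]:
    """Compare every table in the incumbent (source) environment with the new (target) one."""
    report: dict[str, str] = {}
    for table, rows in source.items():
        if table not in target:
            report[table] = "missing in target"
            continue
        src_count, src_digest = table_fingerprint(rows)
        tgt_count, tgt_digest = table_fingerprint(target[table])
        if src_count != tgt_count:
            report[table] = f"row count mismatch: {src_count} vs {tgt_count}"
        elif src_digest != tgt_digest:
            report[table] = "checksum mismatch"
        else:
            report[table] = "ok"
    return report


if __name__ == "__main__":
    # Hypothetical sample data standing in for the incumbent and new warehouses.
    incumbent = {"orders": [(1, "paid"), (2, "refunded")], "customers": [(1, "Ada")], "payments": [(1, 9.99)]}
    migrated = {"orders": [(2, "refunded"), (1, "paid")], "customers": [(1, "Ada L.")]}
    print(compare_environments(incumbent, migrated))
```

Sorting the per-row digests before combining them makes the checksum insensitive to row order, which often differs between warehouses. Because rows are hashed via `repr`, values must be loaded with the same Python types on both sides. For example, a `Decimal` on one side and a `float` on the other would register as a mismatch.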

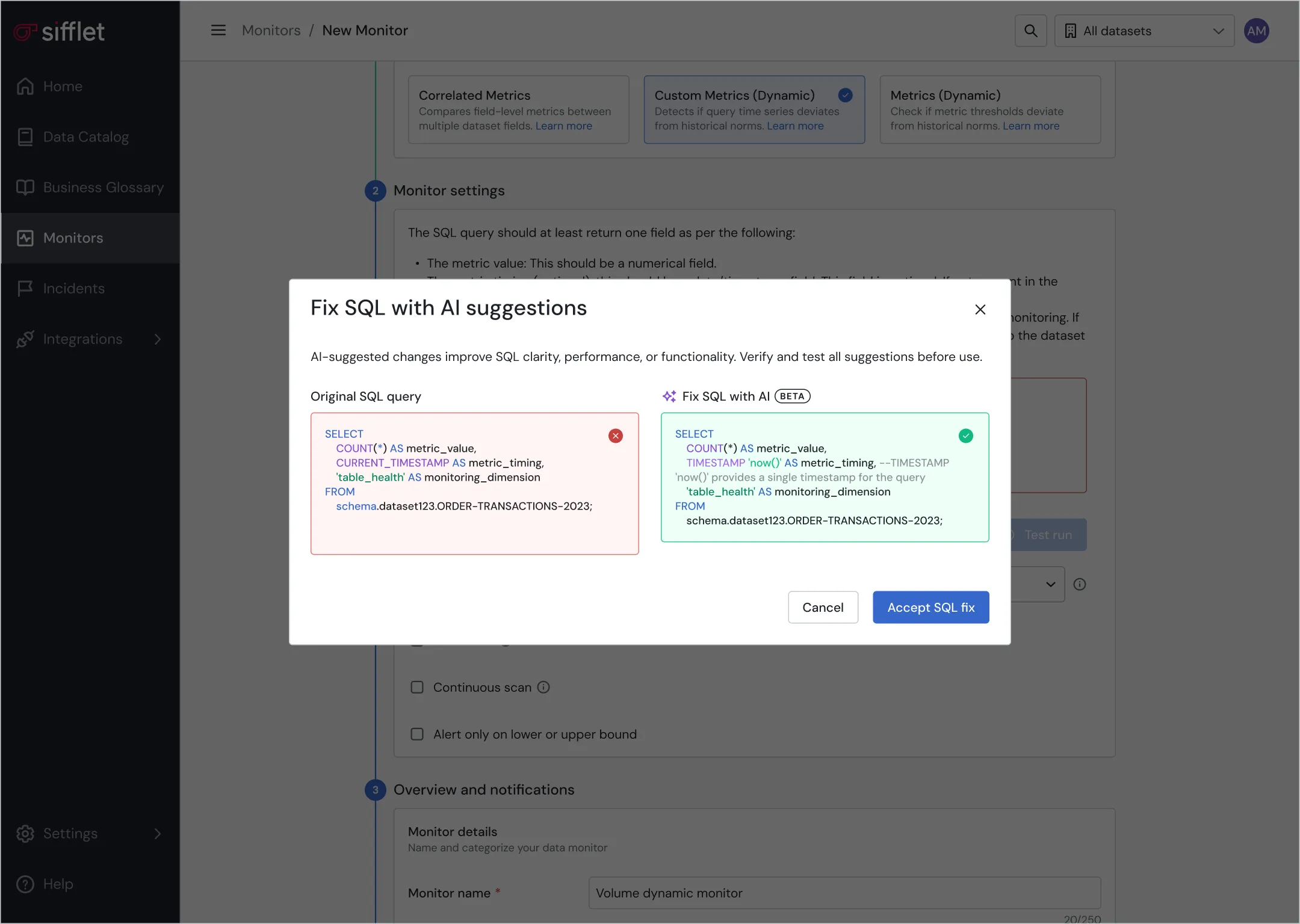

After migration

- Quickly document and classify new pipelines with the Sifflet AI Assistant

- Define data ownership to improve accountability and simplify maintenance of new data pipelines

- Monitor new pipelines to ensure the robustness of data foundations over time

- Leverage lineage to better understand newly built data flows

Frequently asked questions

How can data observability help with SLA compliance and incident management?

Data observability plays a major role in SLA compliance by enabling real-time alerts and proactive monitoring of data freshness, completeness, and accuracy. When issues occur, observability tools help teams quickly perform root cause analysis and understand downstream impacts, which speeds up incident response and reduces downtime. This makes it easier to meet service level agreements and maintain stakeholder trust.

Why is stakeholder trust in data so important, and how can we protect it?

Stakeholder trust is crucial because inconsistent or unreliable data can lead to poor decisions and reduced adoption of data-driven practices. You can protect this trust with strong data quality monitoring, real-time metrics, and consistent reporting. Data observability tools help by alerting teams to issues before they impact dashboards or reports, ensuring transparency and reliability.

What kind of data quality monitoring does Sifflet offer when used with dbt?

When paired with dbt, Sifflet provides robust data quality monitoring by combining dbt test insights with ML-based rules and UI-defined validations. This helps you close test coverage gaps and maintain high data quality throughout your data pipelines.

What role does Sifflet’s Data Catalog play in data governance?

Sifflet’s Data Catalog supports data governance by surfacing labels and tags, enabling classification of data assets, and linking business glossary terms for standardized definitions. This structured approach helps maintain compliance, manage costs, and ensure sensitive data is handled responsibly.

Why did jobvalley choose Sifflet over other data catalog vendors?

After evaluating several data catalog vendors, jobvalley selected Sifflet because of its comprehensive features that addressed both data discovery and data quality monitoring. The platform’s ability to streamline onboarding and support real-time metrics made it the ideal choice for their growing data team.

Can data lineage help with regulatory compliance such as GDPR?

Absolutely. Data lineage supports data governance by mapping data flows and access rights, which is essential for compliance with regulations like GDPR. Features like automated PII propagation help teams monitor sensitive data and enforce security observability best practices.

What exactly is the modern data stack, and why is it so popular now?

The modern data stack is a collection of cloud-native tools that help organizations transform raw data into actionable insights. It's popular because it simplifies data infrastructure, supports scalability, and enables faster, more accessible analytics across teams. With tools like Snowflake, dbt, and Airflow, teams can build robust pipelines while maintaining visibility through data observability platforms like Sifflet.

Why should companies invest in data pipeline monitoring?

Data pipeline monitoring helps teams stay on top of ingestion latency, schema changes, and unexpected drops in data freshness. Without it, issues can go unnoticed and lead to broken dashboards or faulty decisions. With tools like Sifflet, you can set up real-time alerts and reduce downtime through proactive monitoring.
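A basic freshness check compares each table's most recent load time with an allowed lag. This sketch is a hypothetical illustration rather than how Sifflet implements freshness monitoring. The `freshness_alerts` function, the table names, and the two-hour threshold are assumptions, and load timestamps are assumed to be timezone-aware UTC datetimes.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def freshness_alerts(
    last_loaded: dict[str, datetime],
    max_lag: timedelta,
    now: Optional[datetime] = None,
) -> list[str]:
    """Return the tables whose most recent load is older than max_lag."""
    # Assumption: all timestamps are timezone-aware (UTC), so subtraction is well defined.
    if now is None:
        now = datetime.now(timezone.utc)
    return sorted(table for table, loaded_at in last_loaded.items() if now - loaded_at > max_lag)


if __name__ == "__main__":
    # Hypothetical load times; in practice these would come from warehouse metadata.
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    loads = {
        "orders": now - timedelta(minutes=30),
        "events": now - timedelta(hours=6),
    }
    print(freshness_alerts(loads, max_lag=timedelta(hours=2), now=now))
```

With a two-hour threshold, `events` (last loaded six hours earlier) is flagged and `orders` is not, so the script prints `['events']`. A production monitor would also need scheduling and alert routing, which this sketch omits.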

-p-500.png)