Analytics Trust and Reliability

Shared Understanding. Ultimate Confidence. At Scale.

When everyone knows your data is systematically validated for quality, understands where it comes from and how it's transformed, and is aligned on freshness and SLAs, what’s not to trust?

Always Fresh. Always Validated.

No more explaining data discrepancies to the C-suite. Thanks to automatic and systematic validation, Sifflet ensures your data is always fresh and meets your quality requirements. Stakeholders know when data might be stale or interrupted, so they can make decisions with timely, accurate data.

- Automatically detect schema changes, null values, duplicates, or unexpected patterns that could compromise analysis.

- Set and monitor service-level agreements (SLAs) for critical data assets.

- Track when data was last updated and whether it meets freshness requirements.
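
To make the checks above concrete, here is a minimal Python sketch of null, duplicate, and freshness validation on a batch of records. It is an illustration only, not Sifflet's API: the function `check_batch`, its parameters, and the sample field names are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def check_batch(rows, key, required, last_updated, max_age, now=None):
    """Return a list of human-readable issues found in a batch of records.

    Hypothetical helper for illustration; not part of any real product API.
    """
    now = now or datetime.now(timezone.utc)
    issues = []

    # Null check: every required field must be present and not None.
    for field in required:
        missing = sum(1 for row in rows if row.get(field) is None)
        if missing:
            issues.append(f"{missing} null value(s) in '{field}'")

    # Duplicate check: the key field should be unique across the batch.
    seen, dupes = set(), set()
    for row in rows:
        value = row.get(key)
        if value in seen:
            dupes.add(value)
        seen.add(value)
    if dupes:
        issues.append(f"{len(dupes)} duplicated value(s) in '{key}'")

    # Freshness check: the last update must fall within the agreed window.
    if now - last_updated > max_age:
        issues.append("data is stale: last update exceeds the freshness SLA")

    return issues
```

A caller would pass the rows of a table, the agreed freshness window as `max_age`, and the timestamp of the last load, then alert stakeholders whenever the returned list is not empty.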

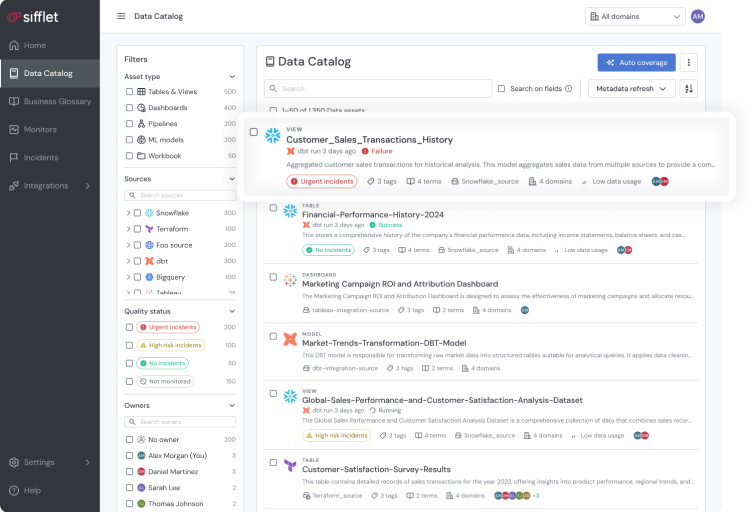

Understand Your Data, Inside and Out

Give data analysts and business users ultimate clarity. Sifflet helps teams understand their data across its whole lifecycle, and gives full context like business definitions, known limitations, and update frequencies, so everyone works from the same assumptions.

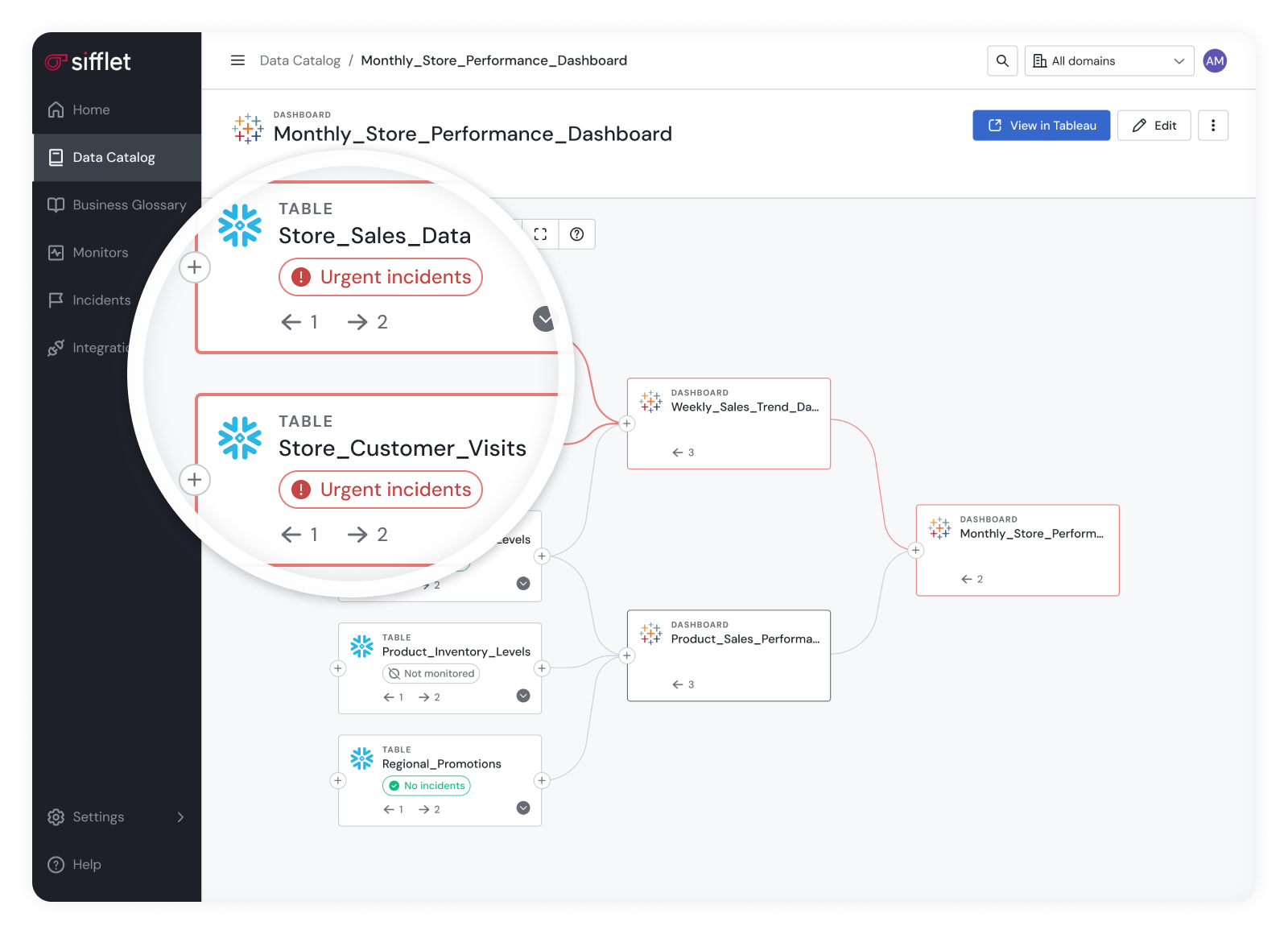

- Create transparency by helping users understand data pipelines, so they always know where data comes from and how it’s transformed.

- Develop a shared understanding of data that prevents misinterpretation and builds confidence in analytics outputs.

- Quickly assess which downstream reports and dashboards are affected when an upstream issue occurs.
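
As a rough illustration of how downstream impact can be derived from lineage, the sketch below walks a lineage graph breadth-first from a failing asset. It assumes lineage is available as a simple mapping from each asset to its direct consumers; the function `downstream_assets` and the asset names are hypothetical and do not represent Sifflet's implementation.

```python
from collections import deque


def downstream_assets(lineage, source):
    """Return every asset reachable from `source`, in breadth-first order."""
    affected, queue, visited = [], deque([source]), {source}
    while queue:
        node = queue.popleft()
        # Visit each direct consumer of the current asset exactly once.
        for child in lineage.get(node, []):
            if child not in visited:
                visited.add(child)
                affected.append(child)
                queue.append(child)
    return affected


# Hypothetical lineage: each asset maps to the assets that read from it.
lineage = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customers"],
    "marts.revenue": ["dashboard.finance"],
    "marts.customers": ["dashboard.finance", "report.churn"],
}

print(downstream_assets(lineage, "staging.orders"))
# ['marts.revenue', 'marts.customers', 'dashboard.finance', 'report.churn']
```

The visited set keeps shared consumers, such as a dashboard fed by two tables, from being reported twice.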

Frequently asked questions

What is the difference between data monitoring and data observability?

Great question! Data monitoring is like your car's dashboard—it alerts you when something goes wrong, like a failed pipeline or a missing dataset. Data observability, on the other hand, is like being the driver. It gives you a full understanding of how your data behaves, where it comes from, and how issues impact downstream systems. At Sifflet, we believe in going beyond alerts to deliver true data observability across your entire stack.

Why is Sifflet excited about integrating MCP with its observability tools?

We're excited because MCP allows us to build intelligent, context-aware agents that go beyond alerts. With MCP, our observability tools can now support real-time metrics analysis, dynamic thresholding, and even automated remediation. It’s a huge step forward in delivering reliable and scalable data observability.

Who benefits from implementing a data observability platform like Sifflet?

Honestly, anyone who relies on data to make decisions—so pretty much everyone. Data engineers, BI teams, data scientists, RevOps, finance, and even executives all benefit. With Sifflet, teams get proactive alerts, root cause analysis, and cross-functional visibility. That means fewer surprises, faster resolutions, and more trust in the data that powers your business.

How does Sifflet support AI-ready data for enterprises?

Sifflet is designed to ensure data quality and reliability, which are critical for AI initiatives. Our observability platform includes features like data freshness checks, anomaly detection, and root cause analysis, making it easier for teams to maintain high standards and trust in their analytics and AI models.

What’s the best way to prevent bad data from impacting our business decisions?

Preventing bad data starts with proactive data quality monitoring. That includes data profiling, defining clear KPIs, assigning ownership, and using observability tools that provide real-time metrics and alerts. Integrating data lineage tracking also helps you quickly identify where issues originate in your data pipelines.

Can open-source ETL tools support data observability needs?

Yes, many open-source ETL tools like Airbyte or Talend can be extended to support observability features. By integrating them with a cloud data observability platform like Sifflet, you can add layers of telemetry instrumentation, anomaly detection, and alerting. This ensures your open-source stack remains robust, reliable, and ready for scale.

How does Sifflet support data teams in improving data pipeline monitoring?

Sifflet’s observability platform offers powerful features like anomaly detection, pipeline error alerting, and data freshness checks. We help teams stay on top of their data workflows and ensure SLA compliance with minimal friction. Come chat with us at Booth Y640 to learn more!

What can I expect from Sifflet at Big Data Paris 2024?

We're so excited to welcome you at Booth #D15 on October 15 and 16! You’ll get to experience live demos of our latest data observability features, hear real client stories like Saint-Gobain’s, and explore how Sifflet helps improve data reliability and streamline data pipeline monitoring.
