Cost Observability

Cost-efficient data pipelines

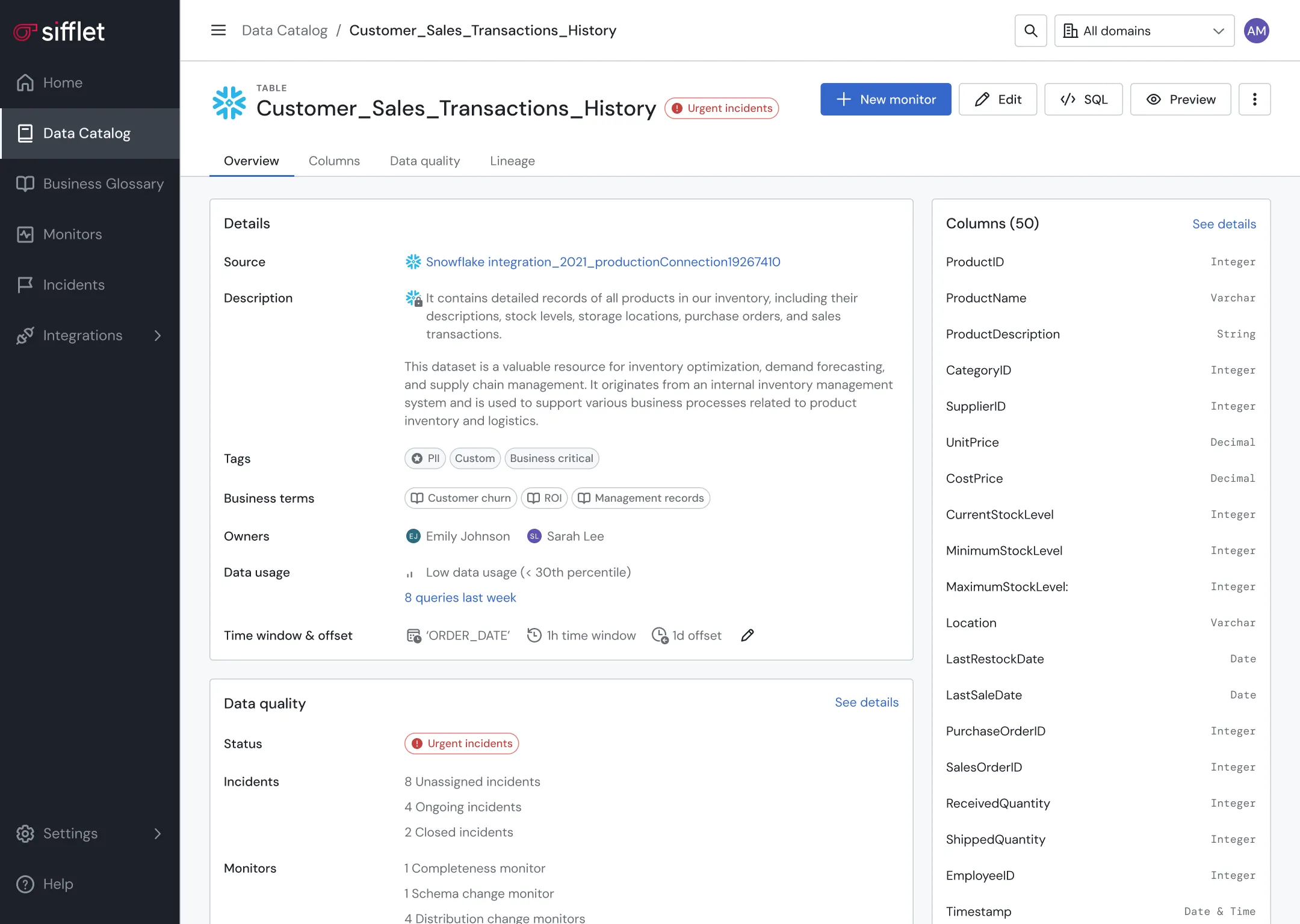

Pinpoint cost inefficiencies and anomalies thanks to full-stack data observability.

Data asset optimization

- Leverage lineage and Data Catalog to pinpoint underutilized assets

- Get alerted on unexpected behaviors in data consumption patterns

Proactive data pipeline management

Stop pipelines from running when a data quality anomaly is detected, so compute is not spent processing bad data.
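As a rough illustration of that idea, the sketch below gates a pipeline run on the results of upstream quality checks. The `QualityCheck` type, the `run_if_healthy` function, and the check names are all hypothetical and are not part of Sifflet's API; a real setup would pull check results from your observability platform or orchestrator.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class QualityCheck:
    """Result of one hypothetical data quality check."""
    name: str
    passed: bool


def run_if_healthy(checks: Iterable[QualityCheck], pipeline: Callable[[], str]) -> str:
    """Run the pipeline only when every quality check passed."""
    failed = [c.name for c in checks if not c.passed]
    if failed:
        # Skip the run so downstream compute is not spent on bad data.
        return "skipped: " + ", ".join(failed)
    return pipeline()


# Illustrative check results (assumed values, not real monitor output).
checks = [
    QualityCheck("orders_freshness", True),
    QualityCheck("orders_null_rate", False),
]
result = run_if_healthy(checks, lambda: "ran")
print(result)
```

Because one check failed, the pipeline callable is never invoked and the function reports which check blocked the run.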

Frequently asked questions

What is data lineage and why is it important for data observability?

Data lineage is the process of tracing data as it moves from source to destination, including all transformations along the way. It's a critical component of data observability because it helps teams understand dependencies, troubleshoot issues faster, and maintain data reliability across the entire pipeline.
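To make the dependency idea concrete, here is a minimal sketch that models lineage as a directed graph and lists everything downstream of a given asset. The asset names and the `LINEAGE` mapping are invented for illustration; they do not reflect how any particular platform stores lineage.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets that read from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.churn"],
    "marts.revenue": ["dashboard.finance"],
    "marts.churn": [],
    "dashboard.finance": [],
}


def downstream(asset, graph):
    """Return every asset reachable from `asset`, in breadth-first order."""
    seen, order, queue = {asset}, [], deque([asset])
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


impacted = downstream("staging.orders", LINEAGE)
print(impacted)
```

If `staging.orders` breaks, this traversal shows which marts and dashboards are affected. The same graph can hint at underutilized assets: in this toy example, `marts.churn` has no downstream consumers.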

Can I learn about real-world results from Sifflet customers at the event?

Yes, definitely! Companies like Saint-Gobain will be sharing how they’ve used Sifflet for data observability, data lineage tracking, and SLA compliance. It’s a great chance to hear how others are solving real data challenges with our platform.

What is data observability and why is it important for modern data teams?

Data observability is the ability to monitor, understand, and troubleshoot data health across the entire data stack. It's essential for modern data teams because it helps ensure data reliability, improves trust in analytics, and prevents costly issues caused by broken data pipelines or inaccurate dashboards. With the rise of complex infrastructures and real-time data usage, having a strong observability platform in place is no longer optional.

What exactly is the modern data stack, and why is it so popular now?

The modern data stack is a collection of cloud-native tools that help organizations transform raw data into actionable insights. It's popular because it simplifies data infrastructure, supports scalability, and enables faster, more accessible analytics across teams. With tools like Snowflake, dbt, and Airflow, teams can build robust pipelines while maintaining visibility through data observability platforms like Sifflet.

How does the Sifflet and Firebolt integration improve data observability?

By integrating with Firebolt, Sifflet enhances your data observability by offering real-time metrics, end-to-end lineage, and automated anomaly detection. This means you can monitor your Firebolt data warehouse with precision and catch data quality issues before they impact the business.

Who should be the first hire on a new data team?

If you're just starting out, look for someone with 'Full Data Stack' capabilities, like a Data Analyst with strong SQL and business acumen or a Data Engineer with analytics skills. This person can work closely with other teams to build initial pipelines and help shape your data platform. As your needs evolve, you can grow your team with more specialized roles.

How do Service Level Indicators (SLIs) help improve data product reliability?

SLIs are a fantastic way to measure the health and performance of your data products. By tracking metrics like data freshness, and pairing them with anomaly detection and real-time alerts, you can ensure your data meets expectations and stays aligned with your team’s SLA compliance goals.
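As one possible way to express a freshness SLI, the sketch below computes the fraction of checks in which a table was refreshed within an allowed lag, then compares it to a target. The function name, the 60-minute threshold, the 90% target, and the observed lags are all assumptions chosen for the example.

```python
def freshness_sli(lag_minutes, max_lag_minutes):
    """Fraction of checks where the table was refreshed within the allowed lag."""
    if not lag_minutes:
        raise ValueError("need at least one observation")
    good = sum(1 for lag in lag_minutes if lag <= max_lag_minutes)
    return good / len(lag_minutes)


# Assumed observations: minutes since last refresh at each scheduled check.
observed = [12, 45, 30, 95, 20, 61, 15, 58]
sli = freshness_sli(observed, max_lag_minutes=60)

slo_target = 0.9  # hypothetical objective: 90% of checks must be fresh
meets_slo = sli >= slo_target
print(f"SLI={sli:.2f}, meets SLO: {meets_slo}")
```

Here six of the eight checks are within the threshold, so the SLI is 0.75 and falls short of the assumed 90% objective.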

How does the new Custom Metadata feature improve data governance?

With Custom Metadata, you can tag any asset, monitor, or domain in Sifflet using flexible key-value pairs. This makes it easier to organize and route data based on your internal logic, whether it's ownership, SLA compliance, or business unit. It's a big step forward for data governance and helps teams surface high-priority monitors more effectively.
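The key-value idea can be pictured with a small sketch that filters monitors by their tags. The data structure, the tag keys, and the `select` helper are hypothetical and only illustrate the concept; they are not Sifflet's actual data model or API.

```python
# Hypothetical monitors, each carrying free-form key-value metadata.
monitors = [
    {"name": "orders_freshness", "metadata": {"owner": "finance", "sla": "gold"}},
    {"name": "events_volume", "metadata": {"owner": "product", "sla": "silver"}},
    {"name": "revenue_nulls", "metadata": {"owner": "finance", "sla": "silver"}},
]


def select(items, **wanted):
    """Return names of items whose metadata matches every requested key-value pair."""
    return [
        item["name"]
        for item in items
        if all(item["metadata"].get(key) == value for key, value in wanted.items())
    ]


finance_monitors = select(monitors, owner="finance")
high_priority = select(monitors, owner="finance", sla="gold")
print(finance_monitors, high_priority)
```

Filtering on several keys at once is what lets a team route alerts by ownership and priority together rather than by a single fixed field.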
