Mitigate disruption and risks

Optimize the management of data assets during each stage of a cloud migration.

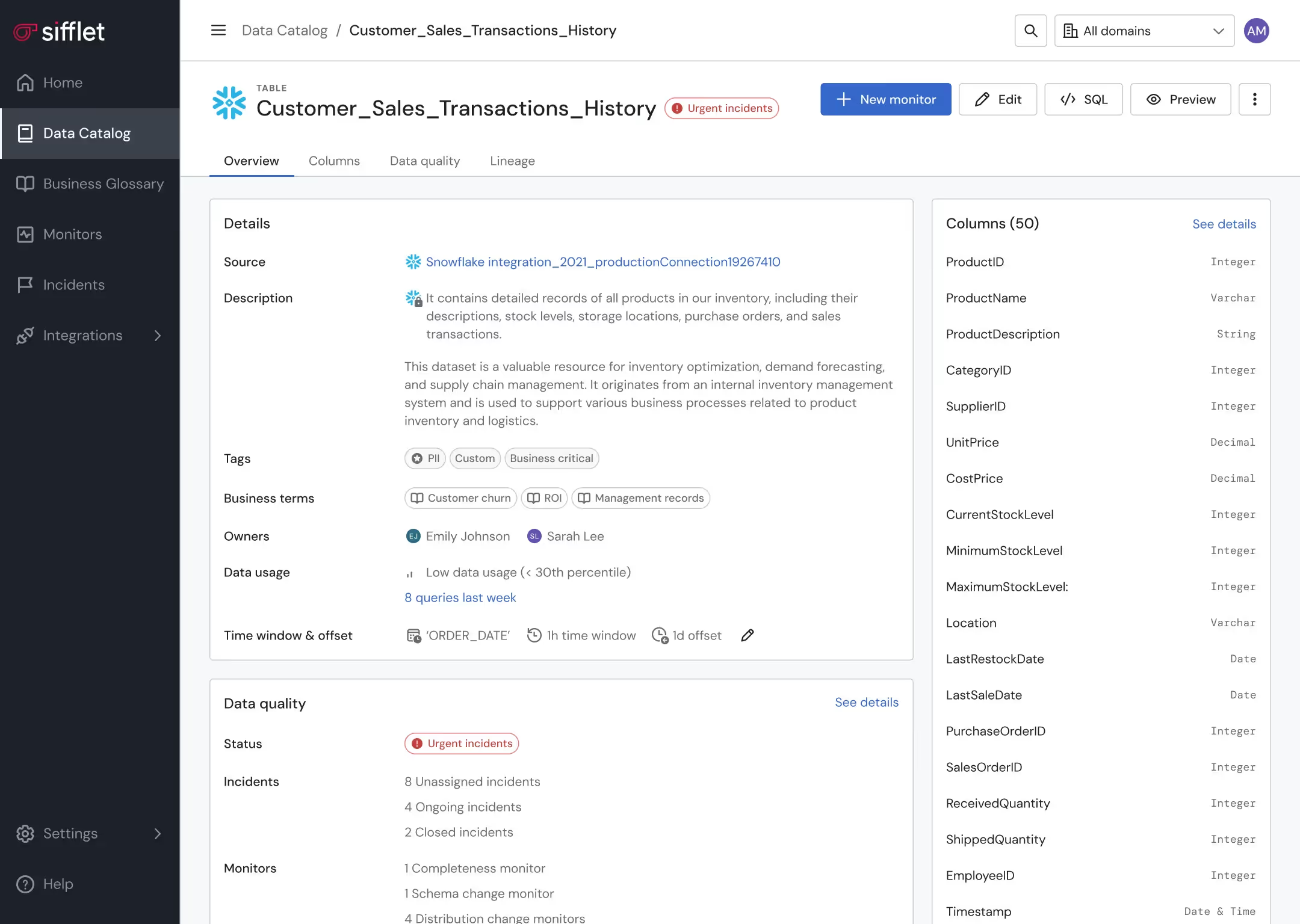

Before migration

- Use the Data Catalog to inventory what needs to be migrated

- Prioritize migration efforts by identifying the most critical assets based on actual usage

- Use lineage to identify the downstream impact of the migration and plan accordingly
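
To make the lineage step concrete, here is a minimal sketch of how downstream impact can be worked out from a lineage graph. It is a generic illustration, not Sifflet's API: the `LINEAGE` mapping, the asset names, and the `downstream_assets` helper are all hypothetical, and a real catalog would supply the edges.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets it feeds directly.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.finance"],
    "marts.customer_ltv": [],
    "dashboard.finance": [],
}


def downstream_assets(lineage, start):
    """Return every asset reachable from `start`, in breadth-first order."""
    seen = set()
    order = []
    queue = deque(lineage.get(start, []))
    while queue:
        asset = queue.popleft()
        if asset in seen:
            continue  # already recorded through another path
        seen.add(asset)
        order.append(asset)
        queue.extend(lineage.get(asset, []))
    return order


# Everything that could be affected if staging.orders is migrated.
impacted = downstream_assets(LINEAGE, "staging.orders")
print(impacted)
```

Breadth-first order lists the closest dependents first, which is usually the order in which teams need to be notified.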


During migration

- Use the Data Catalog to confirm that all data was backed up properly

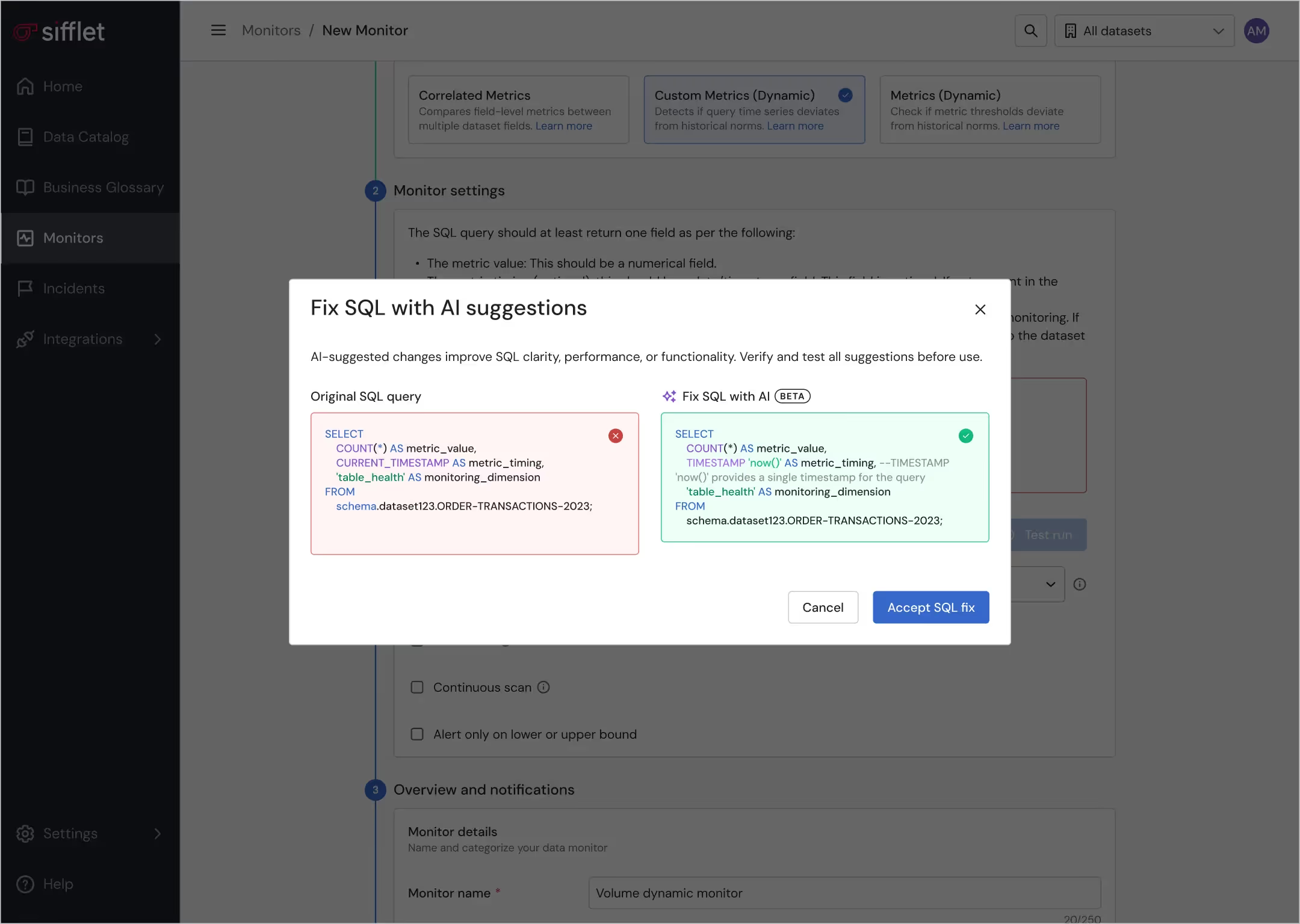

- Ensure the new environment matches the incumbent via dedicated monitors
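
As an illustration of what an environment-parity check can look like, the sketch below compares a row count and a content checksum for the same table in two databases. It is a generic example under stated assumptions, not how Sifflet monitors are implemented: the two in-memory SQLite databases stand in for the incumbent and the new environment, and `table_fingerprint` and `environments_match` are hypothetical helper names.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, sha256 hex digest) for a table, rows ordered by `key`."""
    # Identifiers are interpolated directly, so only pass trusted names here.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def environments_match(source, target, table, key):
    """True when both environments hold the same rows for `table`."""
    return table_fingerprint(source, table, key) == table_fingerprint(target, table, key)


# Stand-ins for the incumbent and the migrated environment (assumption).
legacy = sqlite3.connect(":memory:")
cloud = sqlite3.connect(":memory:")
for conn in (legacy, cloud):
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.5)])

match_before = environments_match(legacy, cloud, "orders", "id")

# Simulate drift introduced during migration.
cloud.execute("UPDATE orders SET amount = 99.0 WHERE id = 2")
match_after = environments_match(legacy, cloud, "orders", "id")

print(match_before, match_after)
```

Loading full tables into memory only suits small datasets; on a warehouse, the count and checksum would normally be computed in SQL on each side and only the results compared.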

After migration

- Quickly document and classify new pipelines with the Sifflet AI Assistant

- Define data ownership to improve accountability and simplify maintenance of new data pipelines

- Monitor new pipelines to ensure the robustness of data foundations over time

- Leverage lineage to better understand newly built data flows

Still have a question?

Contact Us

Frequently asked questions

Why is data observability important during cloud migration?

Great question! Data observability helps you monitor the health and integrity of your data as it moves to the cloud. By using an observability platform, you can track data lineage, detect anomalies, and validate consistency between environments, which reduces the risk of disruptions and broken pipelines.

Can I learn about real-world results from Sifflet customers at the event?

Yes, definitely! Companies like Saint-Gobain will be sharing how they’ve used Sifflet for data observability, data lineage tracking, and SLA compliance. It’s a great chance to hear how others are solving real data challenges with our platform.

Can I monitor my BigQuery data with Sifflet?

Absolutely! Sifflet’s observability tools are fully compatible with Google BigQuery, so you can perform data quality monitoring, data lineage tracking, and anomaly detection right where your data lives.

How did Sifflet support Meero’s incident management and root cause analysis efforts?

Sifflet provided Meero with powerful tools for root cause analysis and incident management. With features like data lineage tracking and automated alerts, the team could quickly trace issues back to their source and take action before they impacted business users.

What kind of integrations does Sifflet offer for data pipeline monitoring?

Sifflet integrates with cloud data warehouses like Snowflake, Redshift, and BigQuery, as well as tools like dbt, Airflow, Kafka, and Tableau. These integrations support comprehensive data pipeline monitoring and ensure observability tools are embedded across your entire stack.

How does data observability support compliance with regulations like GDPR?

Data observability plays a key role in data governance by helping teams maintain accurate documentation, monitor data flows, and quickly detect anomalies. This proactive monitoring helps your data stay compliant with regulations like GDPR and HIPAA, reducing the risk of costly fines and audits.

Is Forge able to automatically fix data issues in my pipelines?

Forge doesn’t take action on its own, but it does provide smart, contextual guidance based on past fixes. It helps teams resolve issues faster while keeping you in full control of the resolution process, which is key for maintaining SLA compliance and data quality.

What’s coming next for dbt integration in Sifflet?

We’re just getting started! Soon, you’ll be able to monitor dbt run performance and resource utilization, define monitors in your dbt YAML files, and use custom metadata even more dynamically. These updates will further enhance your cloud data observability and make your workflows even more efficient.
