Big Data. Big Potential.

Sell data products that meet the most demanding standards of data reliability, quality and health.

Identify Opportunities

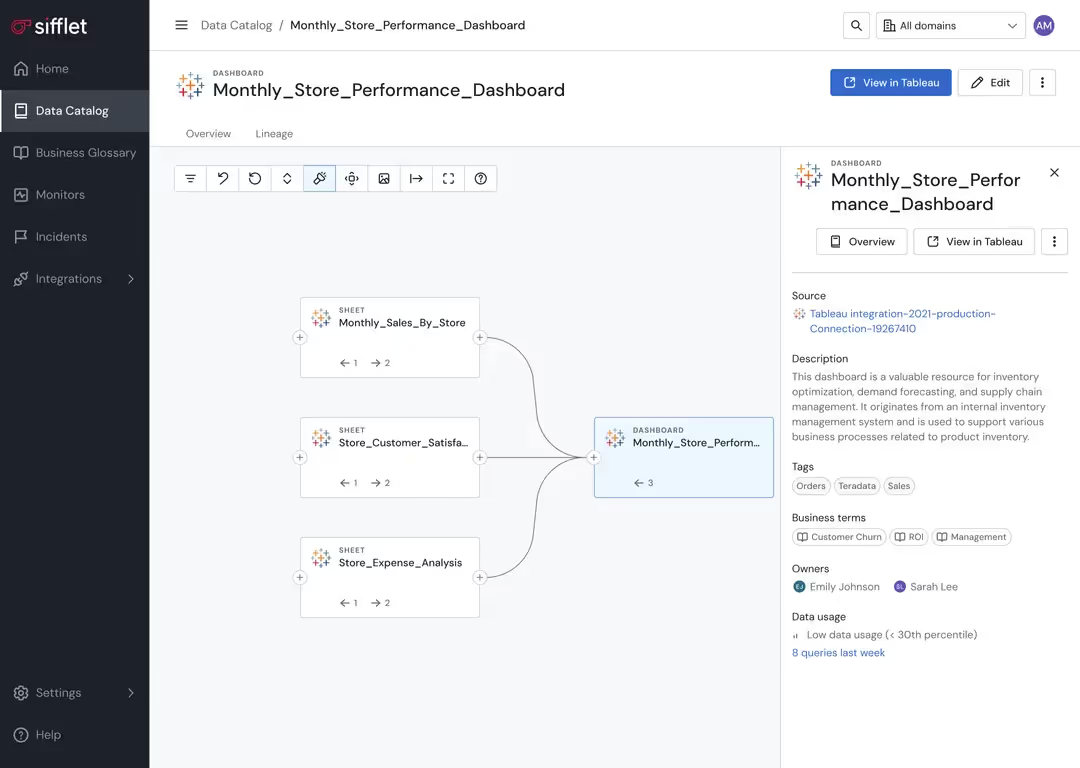

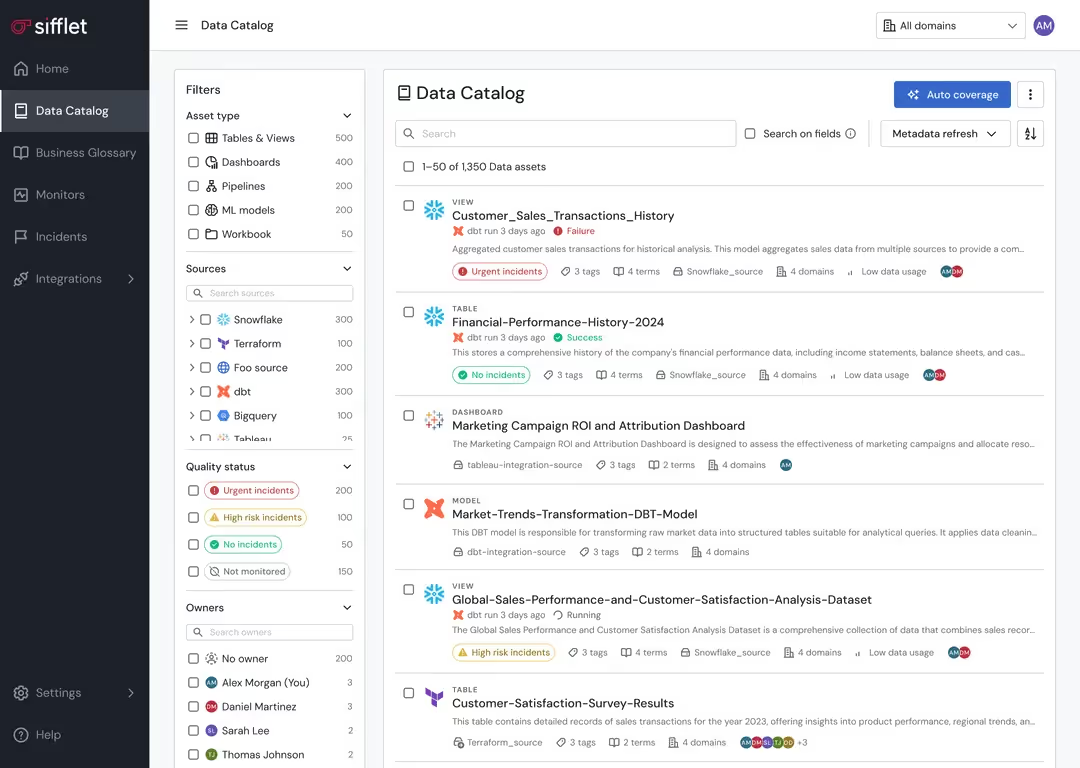

Monetizing data starts with identifying your highest potential data sets. Sifflet can highlight patterns in data usage and quality that suggest monetization potential and help you uncover data combinations that could create value.

- Use usage analytics to dive into data usage patterns and identify high-value data sets

- Determine which data assets are most reliable and complete

Ensure Quality and Operational Excellence

It’s not enough to create a data product: revenue depends on keeping it highly reliable and of high quality. Sifflet helps you maintain quality and operational excellence so your revenue streams stay protected.

- Reduce the cost of maintaining your data products through automated monitoring

- Prevent and detect data quality issues before customers are impacted

- Empower rapid response to issues that could affect data product value

- Streamline data delivery and sharing processes

Still have a question in mind?

Contact Us

Frequently asked questions

How does SQL Table Tracer handle different SQL dialects?

SQL Table Tracer uses Antlr4 with semantic predicates to support multiple SQL dialects, such as Snowflake, Redshift, and PostgreSQL. Semantic predicates are boolean checks that can switch grammar alternatives on or off at parse time, for example depending on the active dialect. This flexible parsing approach helps keep lineage extraction accurate across diverse environments, which is essential for data pipeline monitoring and distributed systems observability.
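To illustrate the idea of dialect-gated rules, here is a minimal hand-written Python sketch. It is not SQL Table Tracer's grammar (which is built with Antlr4); plain `if dialect == ...` checks stand in for semantic predicates. In this sketch, `FROM ONLY <table>` is accepted only for PostgreSQL and `CREATE TABLE <t> CLONE <s>` only for Snowflake. The function `extract_lineage`, its dialect strings, and the regex tokenizer are hypothetical and handle only simple statements.

```python
import re

# Tokens: double-quoted names, (optionally schema-qualified) identifiers, or single symbols.
TOKEN_RE = re.compile(r'"[^"]+"|[A-Za-z_][A-Za-z0-9_.]*|\S')


def clean(name: str) -> str:
    """Strip double quotes from a quoted identifier."""
    return name.strip('"')


def extract_lineage(sql: str, dialect: str) -> tuple:
    """Return (source_tables, target_tables) for one simple SQL statement."""
    tokens = TOKEN_RE.findall(sql)
    upper = [t.upper() for t in tokens]
    sources, targets = set(), set()

    def at(i: int) -> str:
        return upper[i] if i < len(upper) else ""

    i = 0
    while i < len(tokens):
        kw = upper[i]
        if kw in ("FROM", "JOIN") and i + 1 < len(tokens):
            j = i + 1
            # Predicate: only the PostgreSQL rule accepts FROM ONLY <table>.
            if dialect == "postgresql" and at(j) == "ONLY":
                j += 1
            if j < len(tokens) and tokens[j] != "(":  # skip subqueries
                sources.add(clean(tokens[j]))
            i = j + 1
        elif kw == "INTO" and i + 1 < len(tokens):
            targets.add(clean(tokens[i + 1]))
            i += 2
        elif (dialect == "snowflake" and kw == "TABLE"
              and at(i + 2) == "CLONE" and i + 3 < len(tokens)):
            # Predicate: only the Snowflake rule accepts CREATE TABLE <t> CLONE <s>.
            targets.add(clean(tokens[i + 1]))
            sources.add(clean(tokens[i + 3]))
            i += 4
        else:
            i += 1
    return sources, targets


sql = "INSERT INTO mart.daily SELECT * FROM raw.events e JOIN raw.users u ON e.uid = u.id"
print(extract_lineage(sql, "postgresql"))
```

In this sketch, parsing `SELECT * FROM ONLY public.parent` with `dialect="snowflake"` reports `ONLY` as a source table, which is the kind of misread that dialect-aware rules are meant to prevent.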

How can Sifflet help ensure SLA compliance and prevent bad data from affecting business decisions?

Sifflet helps teams stay on top of SLA compliance with proactive data freshness checks, anomaly detection, and incident tracking. Business users can rely on health indicators and lineage views to verify data quality before making decisions, reducing the risk of costly errors due to unreliable data.
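At its core, a freshness check compares how old a table's data is with the staleness its SLA allows. The sketch below assumes you already collect a last-update timestamp per table; `check_freshness`, `sla_breaches`, and the per-table limits are hypothetical names for illustration, not Sifflet's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class FreshnessResult:
    table: str
    staleness: timedelta
    breached: bool


def check_freshness(table: str, last_updated: datetime, max_staleness: timedelta,
                    now: Optional[datetime] = None) -> FreshnessResult:
    """Compare a table's data age against its allowed staleness (hypothetical SLA)."""
    if now is None:
        now = datetime.now(timezone.utc)
    staleness = now - last_updated
    return FreshnessResult(table, staleness, staleness > max_staleness)


def sla_breaches(last_updated: dict, max_staleness: dict, now: datetime) -> list:
    """Return only the tables that breach their SLA, most stale first."""
    results = [check_freshness(t, ts, max_staleness[t], now) for t, ts in last_updated.items()]
    return sorted((r for r in results if r.breached), key=lambda r: r.staleness, reverse=True)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last = {
    "orders": datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc),
    "users": datetime(2024, 1, 1, 11, 30, tzinfo=timezone.utc),
    "payments": datetime(2024, 1, 1, 10, 0, tzinfo=timezone.utc),
}
limits = {"orders": timedelta(hours=1), "users": timedelta(hours=1), "payments": timedelta(hours=1)}
for r in sla_breaches(last, limits, now):
    print(r.table, r.staleness)
# orders 3:00:00
# payments 2:00:00
```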

What is data distribution deviation and why should I care about it?

Data distribution deviation happens when the distribution of your data changes over time, either gradually or suddenly. This kind of shift, often called data drift, can lead to broken queries and misleading business metrics. With Sifflet's data observability platform, you can automatically monitor for these deviations and catch problems before they impact your decisions.
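One common way to quantify distribution deviation is the two-sample Kolmogorov-Smirnov (KS) statistic: the largest gap between the empirical CDFs of a baseline sample and a current sample. This generic technique is chosen for illustration and is not necessarily what Sifflet uses; the 0.2 alert threshold in `has_drifted` is an arbitrary assumption.

```python
from bisect import bisect_right


def ks_statistic(baseline: list, current: list) -> float:
    """Two-sample KS statistic: max |F_baseline(x) - F_current(x)| over all sample points."""
    a, b = sorted(baseline), sorted(current)
    gap = 0.0
    # Both empirical CDFs are step functions, so the maximum gap occurs at a sample point.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def has_drifted(baseline: list, current: list, threshold: float = 0.2) -> bool:
    """Flag drift when the KS statistic exceeds a (hypothetical) threshold."""
    return ks_statistic(baseline, current) > threshold


baseline = [12.0, 15.5, 14.2, 13.8, 15.1, 12.9]
current = [18.3, 19.0, 17.6, 20.1, 18.8, 19.4]
print(ks_statistic(baseline, current))  # 1.0: every current value exceeds every baseline value
```

If you also need p-values, `scipy.stats.ks_2samp` computes the same statistic along with a significance test.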

Why is data observability a crucial part of the modern data stack?

Data observability is essential because it ensures data reliability across your entire stack. As data pipelines grow more complex, having visibility into data freshness, quality, and lineage helps prevent issues before they impact the business. Tools like Sifflet offer real-time metrics, anomaly detection, and root cause analysis so teams can stay ahead of data problems and maintain trust in their analytics.
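Lineage is what makes root cause analysis tractable: when an asset raises an alert, you can walk upstream through failing dependencies until you reach failing assets whose own parents are healthy. The sketch assumes lineage is available as a hypothetical `upstream` map from each asset to its direct parents, and that monitors have already flagged a set of failing assets; it is an illustration, not Sifflet's algorithm.

```python
from collections import deque


def root_causes(upstream: dict, failing: set, alerted: str) -> set:
    """Walk lineage upstream from an alerted asset and return the failing
    assets that have no failing parents of their own (the likely origins)."""
    seen = {alerted}
    queue = deque([alerted])
    causes = set()
    while queue:
        node = queue.popleft()
        failing_parents = [p for p in upstream.get(node, []) if p in failing]
        if node in failing and not failing_parents:
            causes.add(node)
        # Only follow edges through failing assets; healthy parents end the search.
        for p in failing_parents:
            if p not in seen:
                seen.add(p)
                queue.append(p)
    return causes


upstream = {
    "dashboard": ["mart.revenue"],
    "mart.revenue": ["stg.orders", "stg.fx"],
    "stg.orders": ["raw.orders"],
    "stg.fx": ["raw.fx"],
}
failing = {"dashboard", "mart.revenue", "stg.orders", "raw.orders"}
print(root_causes(upstream, failing, "dashboard"))  # {'raw.orders'}
```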

Why is data observability essential for building trusted data products?

Great question! Data observability is key because it helps ensure your data is reliable, transparent, and consistent. When you proactively monitor your data with an observability platform like Sifflet, you can catch issues early, maintain trust with your data consumers, and keep your data products running smoothly.

What role does real-time data play in modern analytics pipelines?

Real-time data is becoming a game-changer for analytics, especially in use cases like fraud detection and personalized recommendations. Streaming data monitoring and real-time metrics collection are essential to harness this data effectively, ensuring that insights are both timely and actionable.
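Real-time metrics collection often comes down to maintaining rolling aggregates over a time window. This minimal sketch tracks throughput and the worst ingestion lag over the last `window_s` seconds. It assumes events are recorded in non-decreasing arrival order and carry their own event timestamps, both in seconds; the `SlidingWindowMonitor` class is hypothetical.

```python
from collections import deque


class SlidingWindowMonitor:
    """Throughput and ingestion lag over the window (now - window_s, now]."""

    def __init__(self, window_s: float):
        self.window_s = window_s
        self.events = deque()  # (arrival_time, event_time) pairs, oldest first

    def record(self, arrival_time: float, event_time: float) -> None:
        self.events.append((arrival_time, event_time))
        self._evict(arrival_time)

    def _evict(self, now: float) -> None:
        # Drop events that arrived at or before the start of the window.
        while self.events and self.events[0][0] <= now - self.window_s:
            self.events.popleft()

    def throughput(self, now: float) -> float:
        """Events per second within the window."""
        self._evict(now)
        return len(self.events) / self.window_s

    def max_lag(self, now: float) -> float:
        """Largest arrival-minus-event delay within the window (0.0 if empty)."""
        self._evict(now)
        return max((a - e for a, e in self.events), default=0.0)


m = SlidingWindowMonitor(window_s=10.0)
m.record(100.0, 99.0)
m.record(105.0, 101.0)
m.record(109.0, 108.5)
print(m.throughput(now=109.0), m.max_lag(now=109.0))  # 0.3 4.0
```

A deque keeps both recording and eviction cheap, since events only ever leave from the oldest end.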

Why did jobvalley choose Sifflet over other data catalog vendors?

After evaluating several data catalog vendors, jobvalley selected Sifflet because of its comprehensive features that addressed both data discovery and data quality monitoring. The platform’s ability to streamline onboarding and support real-time metrics made it the ideal choice for their growing data team.

Why is data observability important for data transformation pipelines?

Great question! Data observability is essential for transformation pipelines because it gives teams visibility into data quality, pipeline performance, and transformation accuracy. Without it, errors can go unnoticed and create downstream issues in analytics and reporting. With a solid observability platform, you can detect anomalies, track data freshness, and ensure your transformations are aligned with business goals.
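As one example of anomaly detection on a transformation's output, the sketch below flags a run whose row count lies more than `z_threshold` standard deviations from recent history. The z-score rule and the default threshold of 3 are illustrative assumptions, and this simple rule ignores seasonality and trend.

```python
from statistics import mean, stdev


def is_anomalous(history: list, latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if its z-score against `history` exceeds `z_threshold`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly constant history stands out
    return abs(latest - mu) / sigma > z_threshold


daily_rows = [1000, 1010, 990, 1005, 995]  # row counts from previous runs
print(is_anomalous(daily_rows, 1012))  # False
print(is_anomalous(daily_rows, 400))   # True
```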
