Redshift

Integrate Sifflet with Redshift to access end-to-end lineage, monitor assets like Spectrum tables, enrich metadata, and gain insights for optimized data observability.

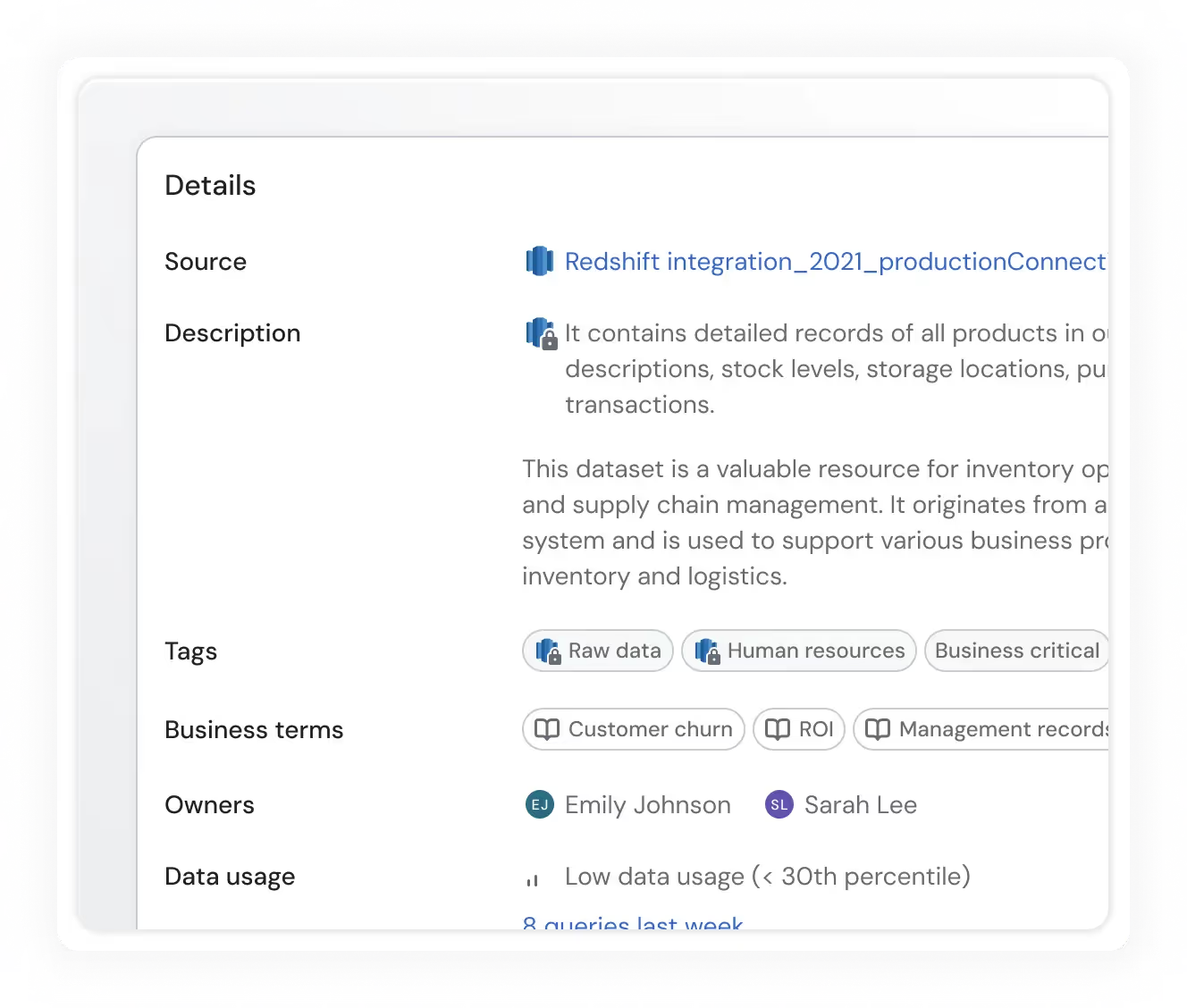

Exhaustive metadata

Sifflet leverages Redshift's internal metadata tables to retrieve information about your assets and enhance it with Sifflet-generated insights.
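As an illustration of what reading Redshift's metadata can look like, here is a minimal sketch. It assumes the `SVV_TABLE_INFO` system view as the source and a generic Python DB-API connection. The helper names `fetch_assets` and `rows_to_assets` are hypothetical, and this is not a description of how Sifflet itself collects metadata.

```python
from typing import Any, Dict, Iterable, List, Tuple

# Assumption: SVV_TABLE_INFO is used as the metadata source. "schema" and
# "table" are quoted because they are reserved words in Redshift SQL.
TABLE_INFO_QUERY = """
SELECT "schema", "table", tbl_rows, size
FROM svv_table_info
ORDER BY "schema", "table"
"""


def rows_to_assets(rows: Iterable[Tuple[Any, Any, Any, Any]]) -> List[Dict[str, Any]]:
    """Turn raw result rows into dictionaries that are easier to work with."""
    return [
        {"schema": schema, "table": table, "row_count": row_count, "size_mb": size_mb}
        for schema, table, row_count, size_mb in rows
    ]


def fetch_assets(connection: Any) -> List[Dict[str, Any]]:
    """Run the metadata query on a DB-API connection (hypothetical helper)."""
    cursor = connection.cursor()
    cursor.execute(TABLE_INFO_QUERY)
    return rows_to_assets(cursor.fetchall())


# Example with hand-written rows instead of a live cluster.
sample_rows = [("public", "orders", 1200, 14), ("public", "customers", 300, 6)]
assets = rows_to_assets(sample_rows)
```

With a live connection from any DB-API compatible Redshift driver, `fetch_assets(connection)` would return the same structure for the tables visible to the connected user.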

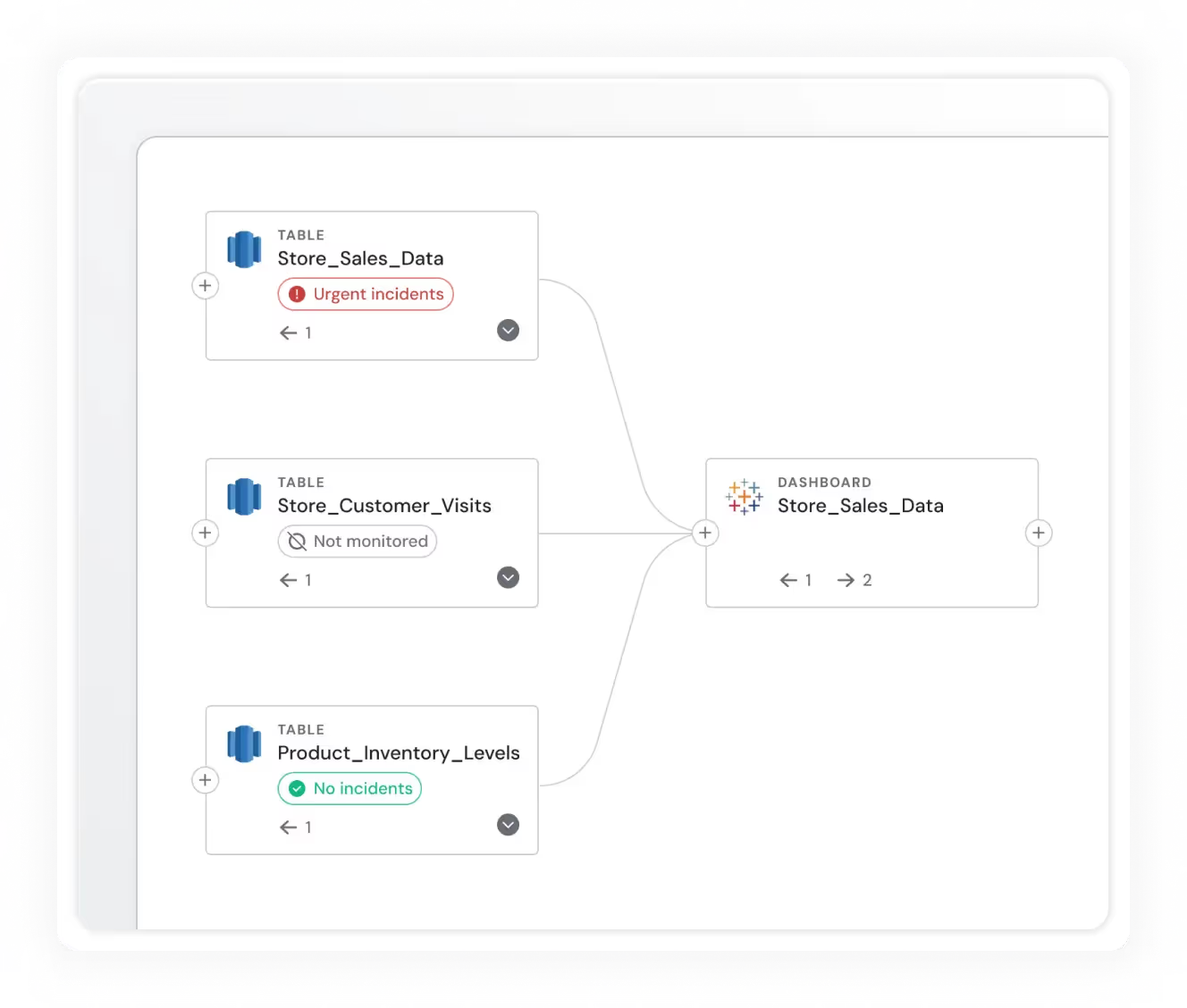

End-to-end lineage

Have a complete understanding of how data flows through your platform via end-to-end lineage for Redshift.

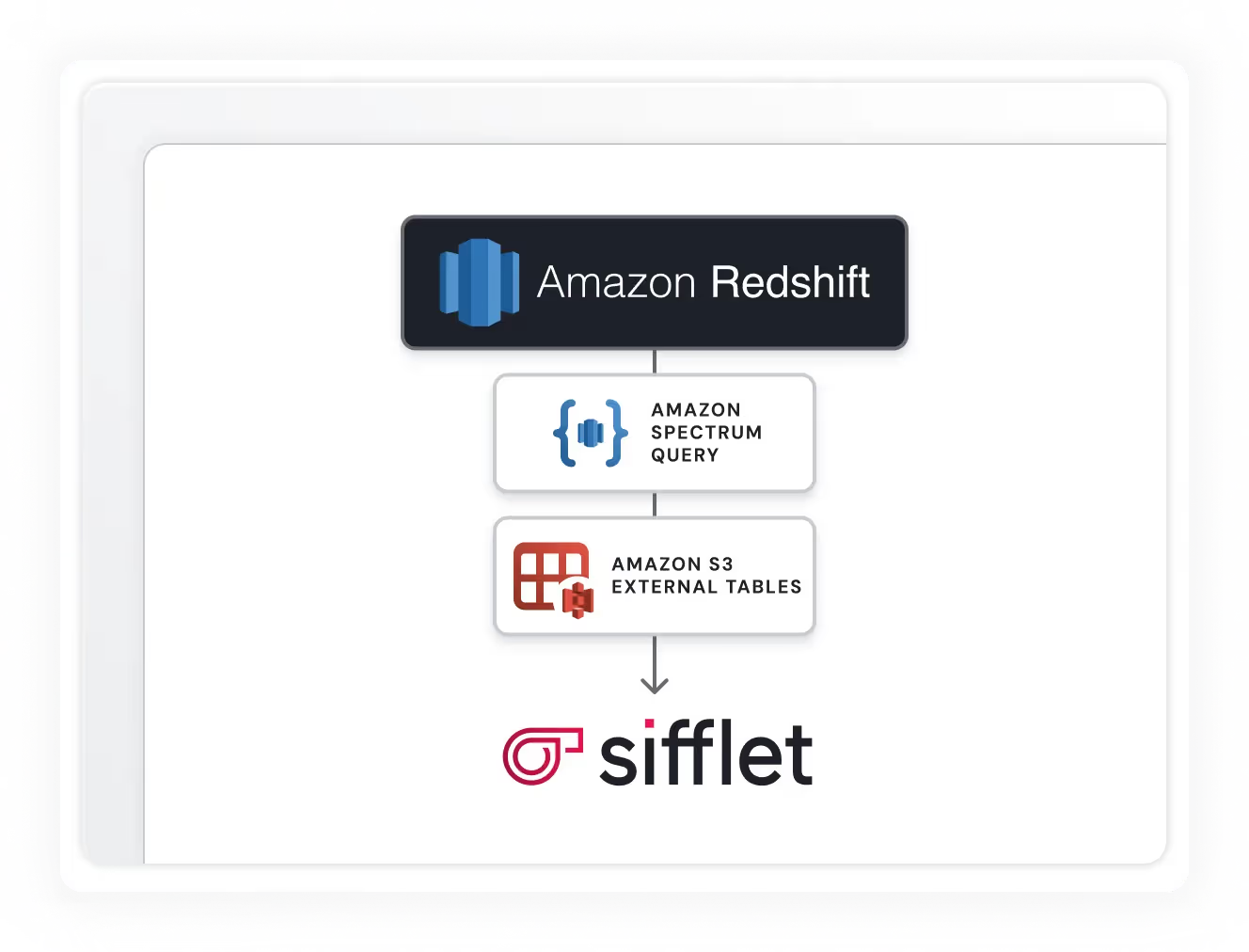

Redshift Spectrum support

Sifflet can monitor external tables via Redshift Spectrum, allowing you to ensure the quality of data stored in other systems like S3.

Still have a question in mind?

Contact Us

Frequently asked questions

How does Sifflet support data quality monitoring?

Sifflet makes data quality monitoring seamless with its auto-coverage feature. It automatically suggests fields to monitor and applies rules for freshness, uniqueness, and null values. This proactive monitoring helps maintain SLA compliance and keeps your data assets trustworthy and safe to use.
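To make the three rule types concrete, here is a minimal sketch of freshness, uniqueness, and null-value checks over in-memory rows. The function names, the sample data, and the thresholds are illustrative assumptions, not Sifflet's implementation or API.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List

Row = Dict[str, Any]


def null_rate(rows: List[Row], field: str) -> float:
    """Fraction of rows where the field is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for row in rows if row.get(field) is None) / len(rows)


def is_unique(rows: List[Row], field: str) -> bool:
    """True when no non-null value of the field appears more than once."""
    values = [row[field] for row in rows if row.get(field) is not None]
    return len(values) == len(set(values))


def is_fresh(rows: List[Row], field: str, max_age: timedelta, now: datetime) -> bool:
    """True when the newest timestamp in the field is within max_age of now."""
    timestamps = [row[field] for row in rows if row.get(field) is not None]
    if not timestamps:
        return False
    return now - max(timestamps) <= max_age


# Hypothetical sample data with a fixed "now" so the result is reproducible.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
rows = [
    {"id": 1, "email": "a@example.com", "updated_at": now - timedelta(hours=3)},
    {"id": 2, "email": None, "updated_at": now - timedelta(hours=1)},
    {"id": 2, "email": "c@example.com", "updated_at": now - timedelta(days=2)},
]

report = {
    "email_null_rate": null_rate(rows, "email"),
    "id_unique": is_unique(rows, "id"),
    "fresh_within_2h": is_fresh(rows, "updated_at", timedelta(hours=2), now),
}
```

In this sample, the duplicated `id` value fails the uniqueness check, one of three emails is null, and the most recent update is one hour old, so the two-hour freshness check passes.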

Why is data observability important during the data integration process?

Data observability is key during data integration because it helps detect issues like schema changes or broken APIs early on. Without it, bad data can flow downstream, impacting analytics and decision-making. At Sifflet, we believe observability should start at the source to ensure data reliability across the whole pipeline.

How does Sifflet help with data observability during the CI process?

Sifflet integrates directly with your CI pipelines on platforms like GitHub and GitLab to proactively surface issues before code is merged. By analyzing the impact of dbt model changes and running data quality monitors in testing environments, Sifflet ensures data reliability and minimizes production disruptions.

How do I choose the right organizational structure for my data team?

It depends on your company's size, data maturity, and use cases. Some teams report to engineering or product, while others operate as independent entities reporting to the CEO or CFO. The key is to avoid silos and unclear ownership. A centralized or hybrid structure often works well to promote collaboration and maintain transparency in data pipelines.

What is data observability and why is it important for modern data teams?

Data observability is the ability to monitor and understand the health of your data across the entire data stack. As data pipelines become more complex, having real-time visibility into where and why data issues occur helps teams maintain data reliability and trust. At Sifflet, we believe data observability is essential for proactive data quality monitoring and faster root cause analysis.

What makes Etam’s data strategy resilient in a fast-changing retail landscape?

Etam’s data strategy is built on clear business alignment, strong data quality monitoring, and a focus on delivering ROI across short, mid, and long-term horizons. With the help of an observability platform, they can adapt quickly, maintain data reliability, and support strategic decision-making even in uncertain conditions.

What can I expect to learn from Sifflet’s session on cataloging and monitoring data assets?

Our Head of Product, Martin Zerbib, will walk you through how Sifflet enables data lineage tracking, real-time metrics, and data profiling at scale. You’ll get a sneak peek at our roadmap and see how we’re making data more accessible and reliable for teams of all sizes.

Why is data observability important for data transformation pipelines?

Great question! Data observability is essential for transformation pipelines because it gives teams visibility into data quality, pipeline performance, and transformation accuracy. Without it, errors can go unnoticed and create downstream issues in analytics and reporting. With a solid observability platform, you can detect anomalies, track data freshness, and ensure your transformations are aligned with business goals.
