Proactive access, quality and control

Empower data teams to detect and address issues proactively by providing them with tools to ensure data availability, usability, integrity, and security.

De-risked data discovery

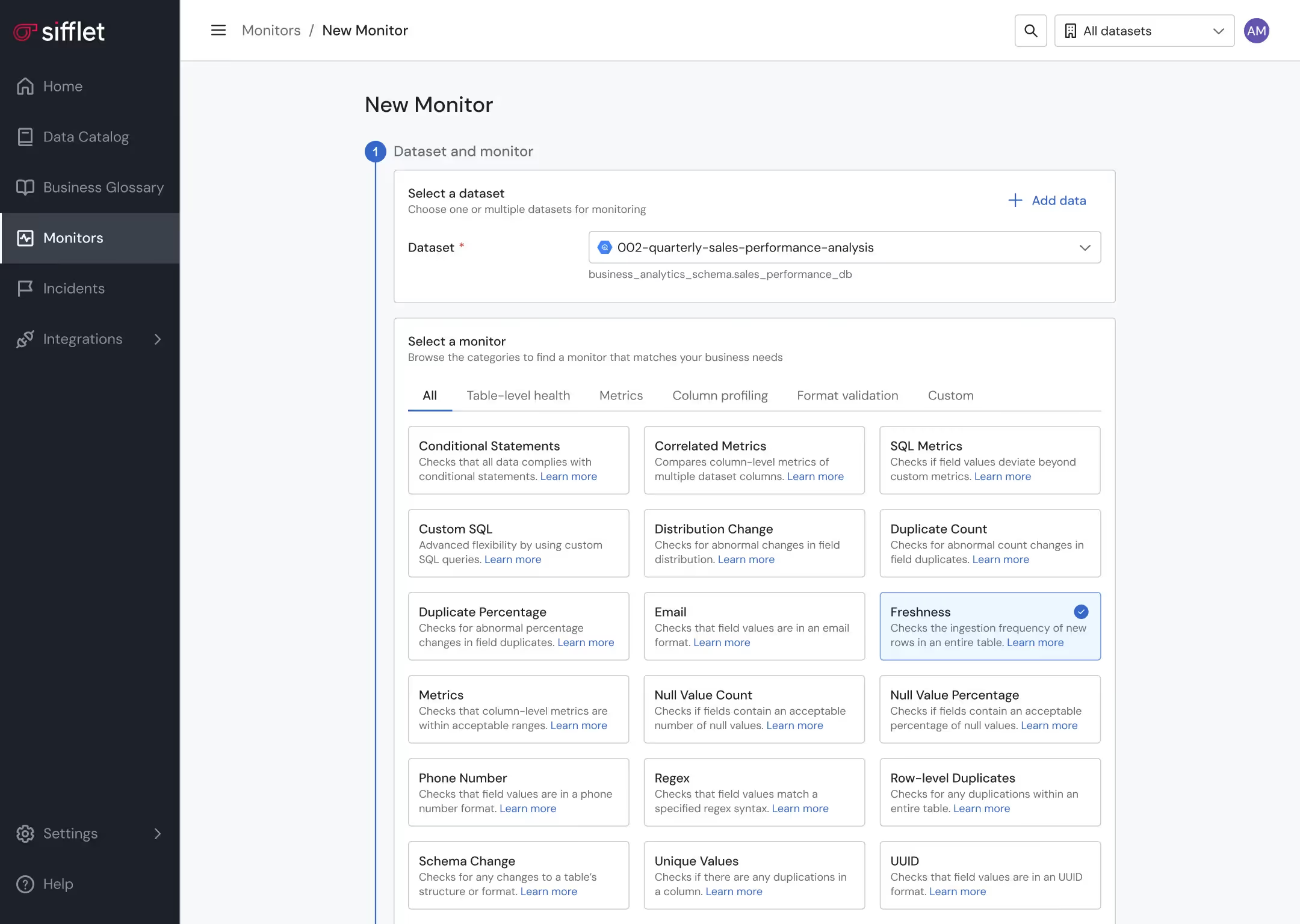

- Monitor data quality proactively with a large library of out-of-the-box (OOTB) monitors and a built-in notification system

- Gain visibility into assets’ documentation and health status in the Data Catalog for safe data discovery

- Establish the official source of truth for key business concepts using the Business Glossary

- Leverage custom tagging to classify assets

Structured data observability platform

- Tailor data visibility for teams by grouping assets in domains that align with the company’s structure

- Define data ownership to improve accountability and make collaboration across teams smoother

Secured data management

- Safeguard personally identifiable information (PII) with ML-based PII detection

Frequently asked questions

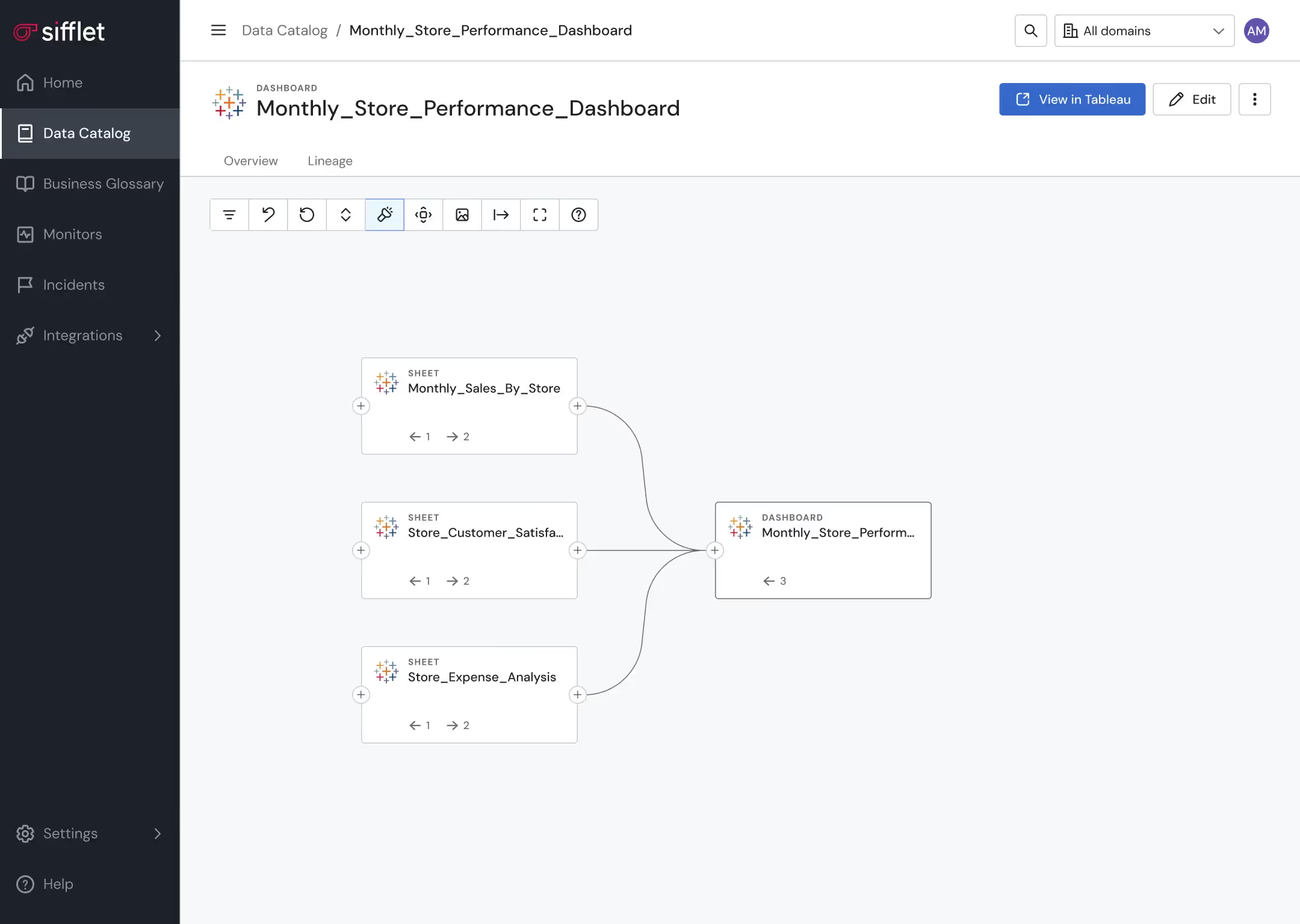

How can I avoid breaking reports and dashboards during migration?

To avoid disruptions, use data lineage tracking. It shows how data flows through your systems, so you can see which downstream reports and dashboards depend on an asset before you change or migrate it. It’s a key part of data pipeline monitoring and helps maintain trust in your analytics.
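
As a rough illustration of downstream impact analysis, the sketch below treats lineage as a directed graph and walks it breadth-first from the asset you plan to migrate. The asset names and the `LINEAGE` dictionary are hypothetical and this is not Sifflet's API; in practice the graph would come from your catalog or observability tool.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets that read from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.finance_weekly"],
    "marts.customer_ltv": ["dashboard.marketing"],
    "dashboard.finance_weekly": [],
    "dashboard.marketing": [],
}


def downstream_impact(graph: dict, asset: str) -> set:
    """Return every asset that directly or transitively depends on `asset`."""
    impacted = set()
    queue = deque(graph.get(asset, []))
    while queue:
        current = queue.popleft()
        if current in impacted:
            continue  # already visited; also protects against cycles
        impacted.add(current)
        queue.extend(graph.get(current, []))
    return impacted


if __name__ == "__main__":
    print(sorted(downstream_impact(LINEAGE, "staging.orders")))
    # ['dashboard.finance_weekly', 'dashboard.marketing', 'marts.customer_ltv', 'marts.revenue']
```

Running it for `staging.orders` lists both marts and both dashboards: these are the assets to review before changing that table.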

How can data lineage tracking help with root cause analysis?

Data lineage tracking shows how data flows through your systems and how different assets depend on each other. This is especially useful for root cause analysis because it lets you trace issues back to their source quickly. With Sifflet’s lineage capabilities, you can understand both the upstream and downstream impact of a data incident, making it easier to resolve problems and prevent future ones.
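
Root cause analysis applies the same idea in the opposite direction. The minimal sketch below assumes a hypothetical `UPSTREAM` map (each asset mapped to the assets it reads from) and a hypothetical set of currently failing assets. It walks upstream from the incident and keeps the failing assets whose own inputs are all healthy, that is, the earliest points of failure. This is a simplified heuristic, not Sifflet's algorithm: it only follows failing parents, so a problem in an unmonitored upstream asset would not surface.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the upstream assets it reads from.
UPSTREAM = {
    "dashboard.finance_weekly": ["marts.revenue"],
    "marts.revenue": ["staging.orders", "staging.fx_rates"],
    "staging.orders": ["raw.orders"],
    "staging.fx_rates": ["raw.fx_rates"],
    "raw.orders": [],
    "raw.fx_rates": [],
}

# Hypothetical monitor results: assets currently in a failing state.
FAILING = {"dashboard.finance_weekly", "marts.revenue", "staging.orders", "raw.orders"}


def root_cause_candidates(upstream: dict, failing: set, incident_asset: str) -> set:
    """Walk upstream from the incident and return failing assets whose
    upstream dependencies are all healthy (the earliest failure points)."""
    candidates = set()
    seen = set()
    queue = deque([incident_asset])
    while queue:
        asset = queue.popleft()
        if asset in seen:
            continue
        seen.add(asset)
        failing_parents = [p for p in upstream.get(asset, []) if p in failing]
        if asset in failing and not failing_parents:
            candidates.add(asset)
        # Heuristic: only keep walking through parents that are failing too.
        queue.extend(failing_parents)
    return candidates


if __name__ == "__main__":
    print(root_cause_candidates(UPSTREAM, FAILING, "dashboard.finance_weekly"))
    # {'raw.orders'}
```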

How does data observability support better data quality management?

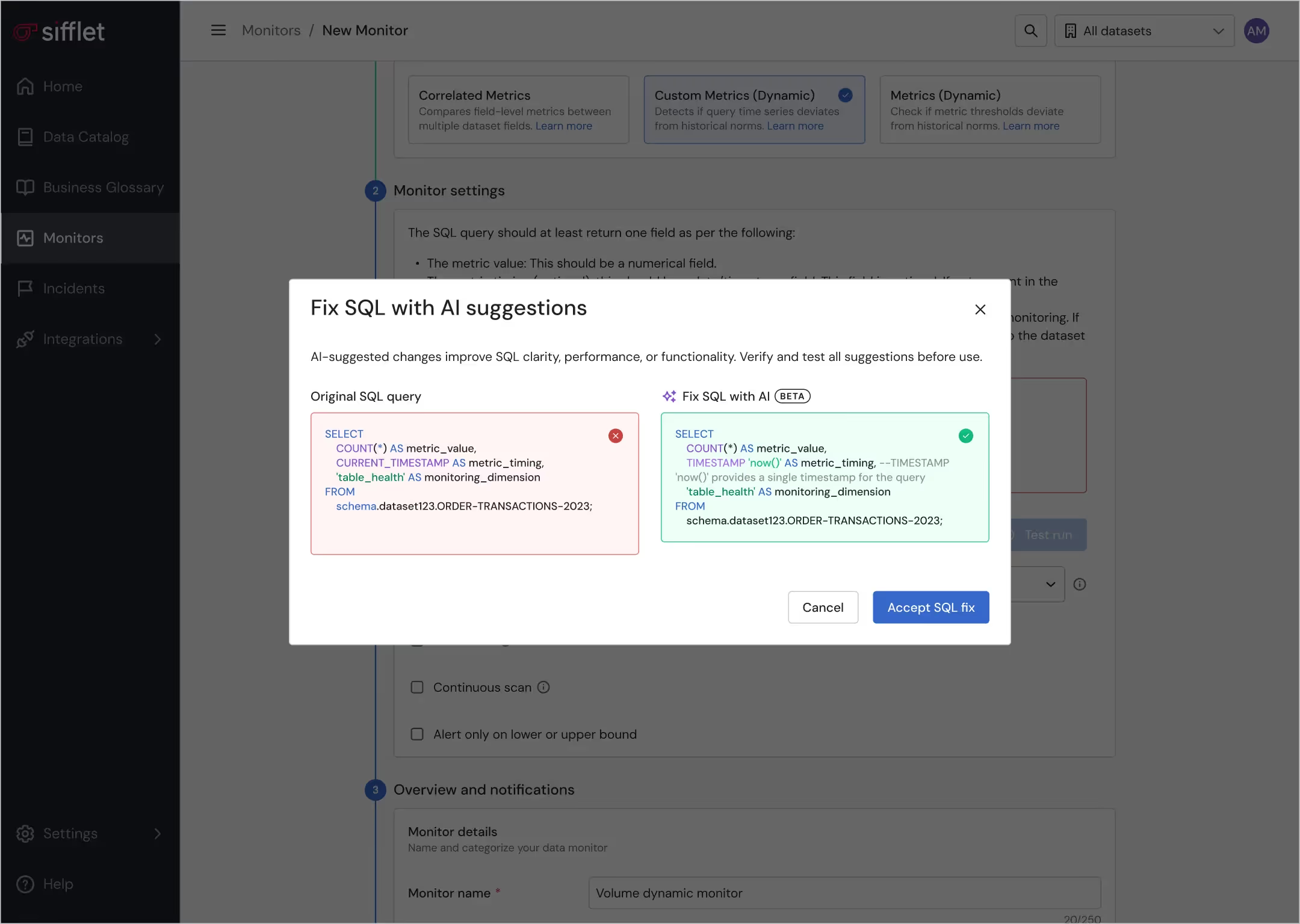

Data observability plays a key role by giving teams real-time visibility into the health of their data pipelines. With observability tools like Sifflet, you can monitor data freshness, detect anomalies, and trace issues back to their root cause. This allows you to catch and fix data quality issues before they impact business decisions, making your data more reliable and your operations more efficient.
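
Anomaly detection can be implemented in many ways. The sketch below uses a plain z-score test on a hypothetical history of daily row counts, assuming that a value more than three standard deviations from the recent mean is worth an alert. It is a swapped-in textbook technique for illustration, not the model Sifflet uses.

```python
from statistics import mean, stdev


def is_anomalous(history: list, latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data points to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts for one table over the past week.
daily_rows = [10_120, 9_980, 10_050, 10_210, 9_940, 10_075, 10_030]

if __name__ == "__main__":
    print(is_anomalous(daily_rows, 10_100))  # False: within the usual range
    print(is_anomalous(daily_rows, 2_300))   # True: sudden drop in volume
```

A fixed threshold keeps the example simple; real monitors usually also account for trends and weekly seasonality.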

Can Sifflet help me trace how data moves through my pipelines?

Absolutely! Sifflet’s data lineage tracking gives you a clear view of how data flows and transforms across your systems. This level of transparency is crucial for root cause analysis and ensuring data governance standards are met.

How can I measure whether my data is trustworthy?

Great question! To measure data quality, you can track key metrics like accuracy, completeness, consistency, relevance, and freshness. These indicators help you evaluate the health of your data and are often part of a broader data observability strategy that ensures your data is reliable and ready for business use.
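
Two of those metrics are straightforward to compute directly. The minimal sketch below assumes rows are plain Python dictionaries and uses a hypothetical four-hour freshness target: completeness is the share of non-null values in a column, and freshness is the time elapsed since the last update. Accuracy, consistency, and relevance usually need business rules or reference data, so they are left out.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def completeness(rows: list, column: str) -> float:
    """Share of rows (0.0 to 1.0) where `column` is present and not None."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


def freshness_lag(last_updated: datetime, now: Optional[datetime] = None) -> timedelta:
    """Time elapsed since the data was last updated (timezone-aware datetimes)."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated


# Hypothetical sample rows and timestamps.
orders = [
    {"order_id": 1, "customer_id": "c-17", "amount": 42.0},
    {"order_id": 2, "customer_id": None, "amount": 19.5},
    {"order_id": 3, "customer_id": "c-08", "amount": None},
    {"order_id": 4, "customer_id": "c-17", "amount": 7.25},
]
now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
last = datetime(2024, 5, 1, 6, 0, tzinfo=timezone.utc)

if __name__ == "__main__":
    print(completeness(orders, "customer_id"))               # 0.75
    print(freshness_lag(last, now) > timedelta(hours=4))     # True: stale vs. a 4-hour target
```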

Can Sifflet integrate with our existing data tools and platforms?

Absolutely! Sifflet is designed to integrate seamlessly with your current stack. We support a wide range of tools including Airflow, Snowflake, AWS Glue, and more. Our goal is to give you full visibility into pipeline orchestration, along with data freshness checks, all from one intuitive interface.

What is the MCP Server and how does it help with data observability?

The MCP (Model Context Protocol) Server is a new interface that lets you interact with Sifflet directly from your development environment. It's designed to make data observability more seamless by allowing you to query assets, review incidents, and trace data lineage without leaving your IDE or notebook. This helps streamline your workflow and gives you real-time visibility into pipeline health and data quality.

How does Sifflet maintain visual and interaction consistency across its observability platform?

We use a reusable component library based on atomic design principles, along with UX writing guidelines to ensure consistent terminology. This helps users quickly understand telemetry instrumentation, metrics collection, and incident response workflows without needing to relearn interactions across different parts of the platform.