Shared Understanding. Ultimate Confidence. At Scale.

When everyone knows your data is systematically validated for quality, understands where it comes from and how it's transformed, and is aligned on freshness and SLAs, what’s not to trust?

Always Fresh. Always Validated.

No more explaining data discrepancies to the C-suite. Thanks to automatic and systematic validation, Sifflet ensures your data is always fresh and meets your quality requirements. Stakeholders know when data might be stale or interrupted, so they can make decisions with timely, accurate data.

- Automatically detect schema changes, null values, duplicates, or unexpected patterns that could compromise analysis.

- Set and monitor service-level agreements (SLAs) for critical data assets.

- Track when data was last updated and whether it meets freshness requirements.
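To make the freshness idea concrete, here is a minimal sketch of a freshness check in plain Python. It is an illustration only, not Sifflet's API: the function name `is_fresh`, the `max_age` parameter, and the six-hour SLA are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_updated, max_age, now=None):
    """Return True if the asset was updated within the allowed window.

    Hypothetical helper for illustration; not part of any Sifflet API.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now - last_updated <= max_age


# Assumed SLA: the table must have been refreshed within the last 6 hours.
sla = timedelta(hours=6)
check_time = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)

updated_recently = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)  # 3 hours old
updated_long_ago = datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc)  # 10 hours old

print(is_fresh(updated_recently, sla, now=check_time))
print(is_fresh(updated_long_ago, sla, now=check_time))
```

Passing `now` explicitly keeps the check deterministic, which makes it easy to test against a fixed timestamp.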

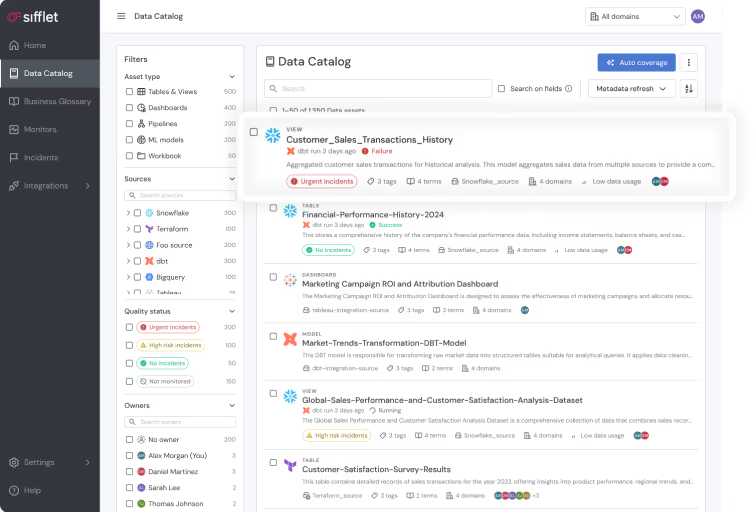

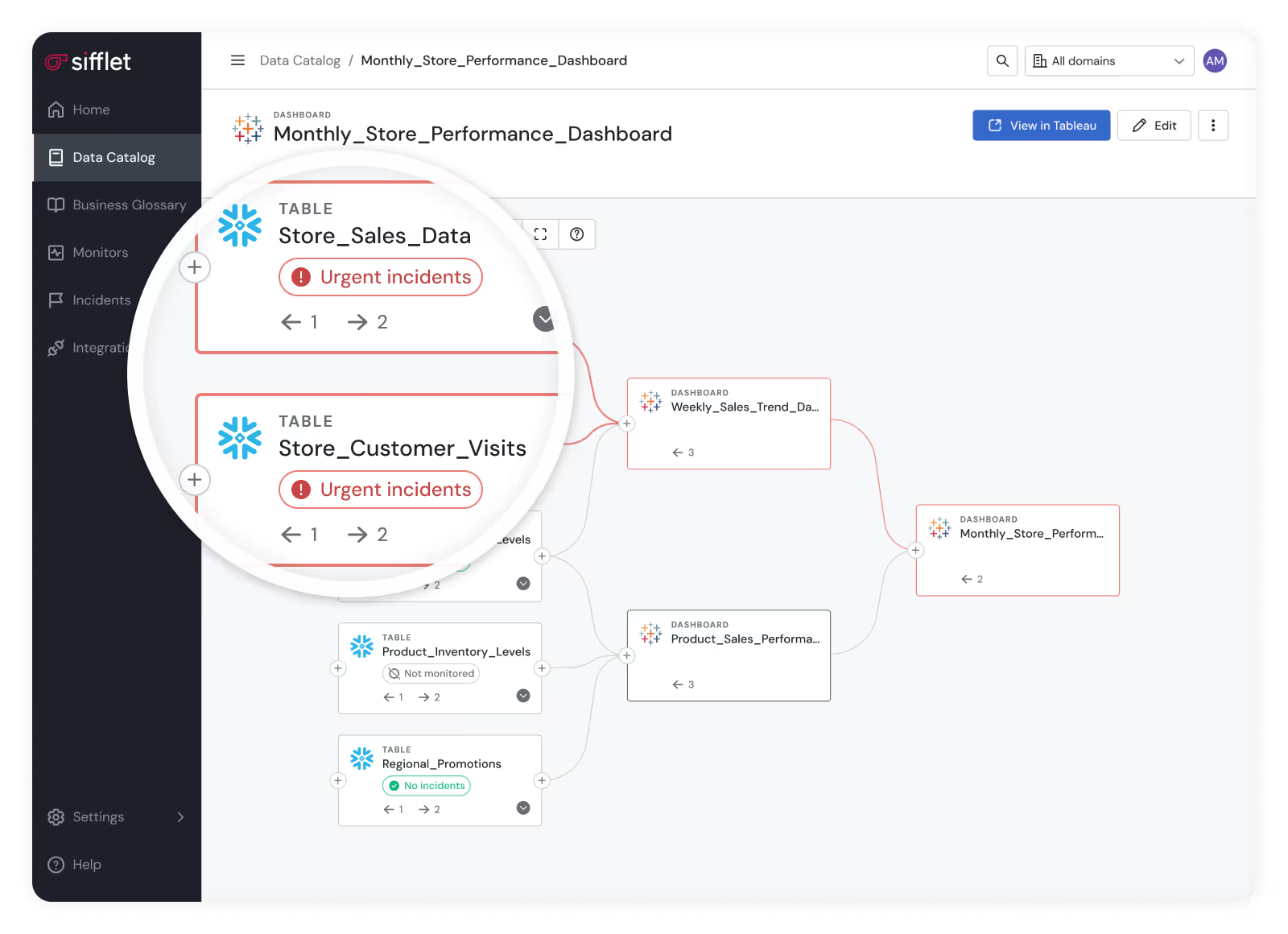

Understand Your Data, Inside and Out

Give data analysts and business users ultimate clarity. Sifflet helps teams understand their data across its whole lifecycle, and gives full context like business definitions, known limitations, and update frequencies, so everyone works from the same assumptions.

- Create transparency by helping users understand data pipelines, so they always know where data comes from and how it’s transformed.

- Develop a shared understanding of data that prevents misinterpretation and builds confidence in analytics outputs.

- Quickly assess which downstream reports and dashboards are affected when a data issue occurs.

Still have a question in mind?

Contact Us

Frequently asked questions

Why is data quality so critical for businesses today?

Great question! Data quality is essential because it directly influences decision-making, customer satisfaction, and operational efficiency. Poor data quality can lead to faulty insights, wasted resources, and even reputational damage. That's why many teams are turning to data observability platforms to ensure their data is accurate, complete, and trustworthy across the entire pipeline.

Can I use data monitoring and data observability together?

Absolutely! In fact, data monitoring is often a key feature within a broader data observability solution. At Sifflet, we combine traditional monitoring with advanced capabilities like data profiling, pipeline health dashboards, and data drift detection so you get both alerts and insights in one place.

How can poor data distribution impact machine learning models?

When data distribution shifts unexpectedly, it can throw off the assumptions your ML models are trained on. For example, if a new payment processor causes 70% of transactions to fall under $5, a fraud detection model might start flagging legitimate behavior as suspicious. That's why real-time metrics and anomaly detection are so crucial for ML model monitoring within a good data observability framework.
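The payment-processor example can be sketched as a simple share-based drift check. This is a minimal illustration under stated assumptions, not how Sifflet detects drift: the function names, the sample amounts, and the 0.2 tolerance are all hypothetical.

```python
def share_below(amounts, threshold):
    """Fraction of amounts strictly below the threshold."""
    if not amounts:
        raise ValueError("amounts must not be empty")
    return sum(1 for a in amounts if a < threshold) / len(amounts)


def distribution_shifted(baseline, current, threshold=5.0, max_delta=0.2):
    """Flag a shift when the share of small amounts moves by more than max_delta.

    Hypothetical check for illustration; the tolerance is an assumption.
    """
    delta = abs(share_below(current, threshold) - share_below(baseline, threshold))
    return delta > max_delta


# Made-up sample data: transaction amounts in dollars.
baseline = [3.0, 12.5, 40.0, 8.0, 95.0, 4.5, 22.0, 60.0, 15.0, 30.0]  # 2 of 10 under $5
current = [1.0, 2.5, 3.0, 4.0, 4.5, 2.0, 3.5, 20.0, 45.0, 9.0]  # 7 of 10 under $5

print(share_below(baseline, 5.0))
print(share_below(current, 5.0))
print(distribution_shifted(baseline, current))
```

A production check would more likely compare full distributions with a statistical test, but a single-threshold share is enough to show why a model trained on the baseline would see the new data as unusual.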

How does Sifflet stand out among other data observability tools?

Sifflet takes a unique approach by addressing data reliability as both an engineering and business challenge. Our observability platform offers end-to-end coverage, business context, and a collaboration layer that aligns technical teams with strategic outcomes, making it easier to maintain analytics and AI-ready data.

How does data observability improve the value of a data catalog?

Data observability enhances a data catalog by adding continuous monitoring, data lineage tracking, and real-time alerts. This means organizations can not only find their data but also trust its accuracy, freshness, and consistency. By integrating observability tools, a catalog becomes part of a dynamic system that supports SLA compliance and proactive data governance.

Why are data teams moving away from Monte Carlo to newer observability tools?

Many teams are looking for more flexible and cost-efficient observability tools that offer better business user access and faster implementation. Monte Carlo, while a pioneer, has become known for its high costs, limited customization, and lack of business context in alerts. Newer platforms like Sifflet and Metaplane focus on real-time metrics, cross-functional collaboration, and easier setup, making them more appealing for modern data teams.

How does data observability fit into a modern data platform?

Data observability is a critical layer of a modern data platform. It helps monitor pipeline health, detect anomalies, and ensure data quality across your stack. With observability tools like Sifflet, teams can catch issues early, perform root cause analysis, and maintain trust in their analytics and reporting.

Is this feature scalable for large datasets and multiple data assets?

Yes, it is! With Sifflet’s auto-coverage and observability tools, you can monitor distribution deviation at scale with just a few clicks. Whether you're working with batch or streaming data, Sifflet has you covered with automated, scalable insights.
