Coverage without compromise.

Grow monitoring coverage intelligently as your stack scales and do more with fewer resources thanks to tooling that reduces maintenance burden, improves signal-to-noise, and helps you understand impact across interconnected systems.

Don’t Let Scale Stop You

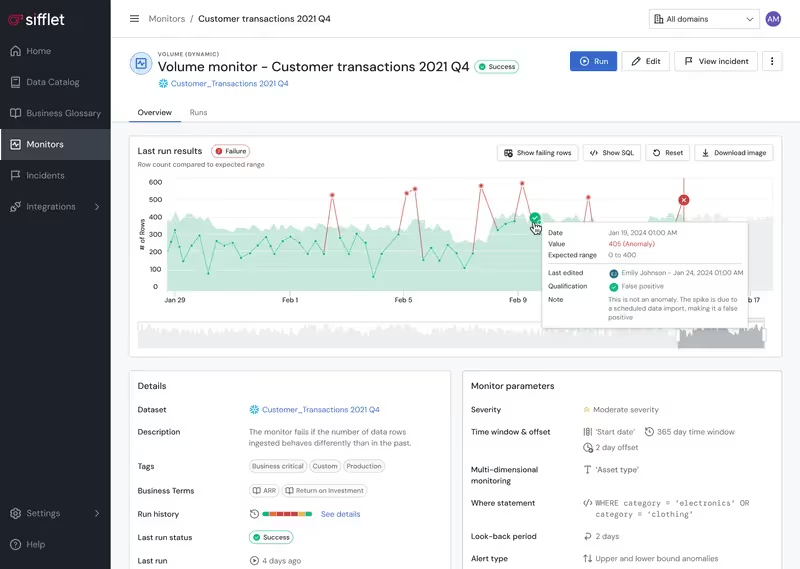

As your stack and data assets scale, so does the number of monitors. Keeping rules updated becomes a full-time job, and tribal knowledge about monitors gets scattered, so teams struggle to sunset obsolete monitors while adding new ones. Sifflet puts an end to that.

- Optimize monitoring coverage and minimize noise levels with AI-powered suggestions and supervision that adapt dynamically

- Implement programmatic monitoring setup and maintenance with Data Quality as Code (DQaC)

- Automate monitor creation and updates based on data changes

- Reduce maintenance overhead with centralized monitor management

Get Clear and Consistent

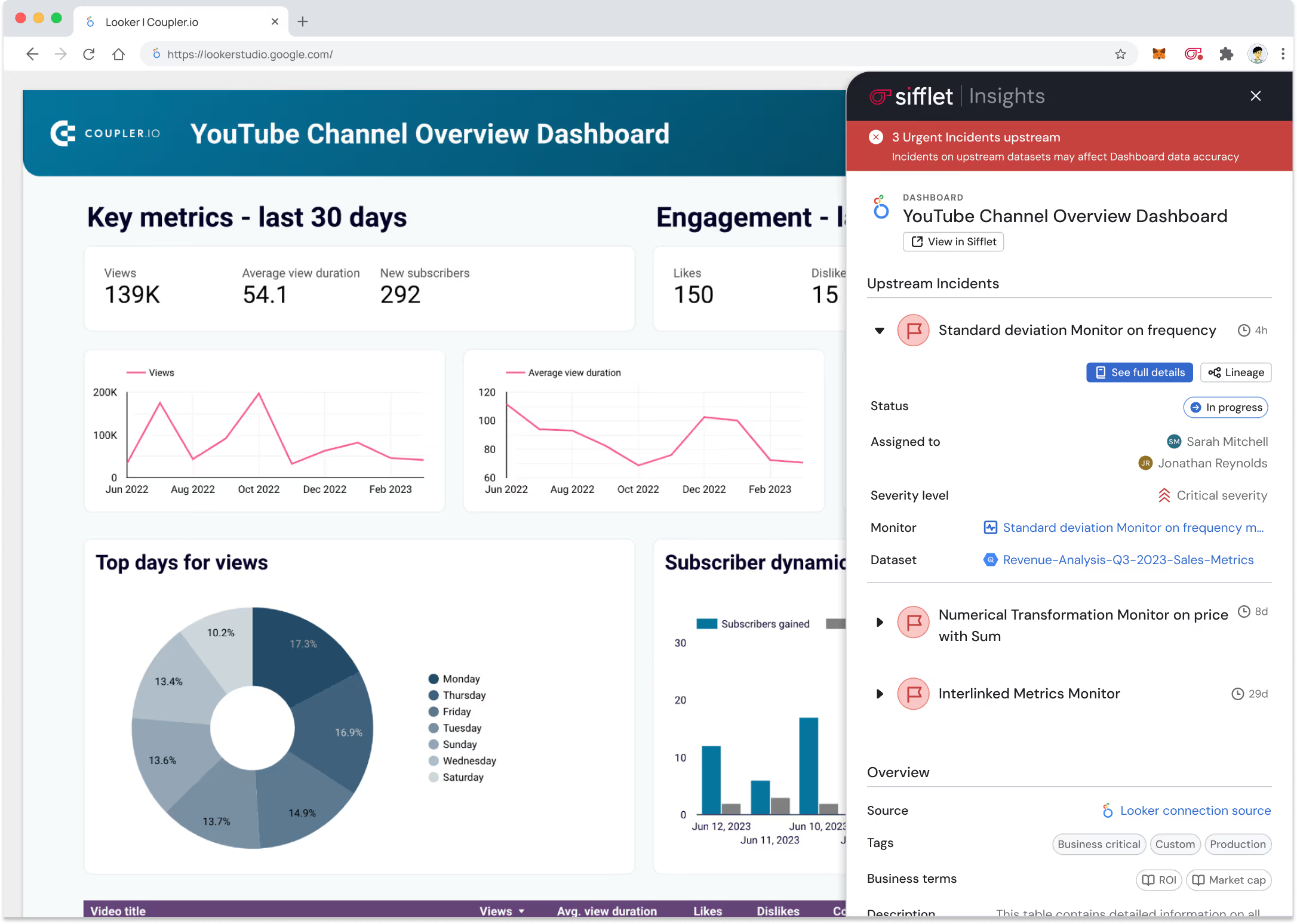

Maintaining consistent monitoring practices across tools, platforms, and internal teams that work across different parts of the stack isn’t easy. Sifflet makes it a breeze.

- Set up consistent alerting and response workflows

- Benefit from unified monitoring across your platforms and tools

- Use automated dependency mapping to show system relationships and benefit from end-to-end visibility across the entire data pipeline

Still have a question in mind?

Contact Us

Frequently asked questions

What makes Sifflet's architecture unique for secure data pipeline monitoring?

Sifflet uses a cell-based architecture that isolates each customer’s instance and database. This ensures that even under heavy usage or a potential breach, your data pipeline monitoring remains secure, reliable, and unaffected by other customers’ activities.

How does data observability complement a data catalog?

While a data catalog helps you find and understand your data, data observability ensures that the data you find is actually reliable. Observability tools like Sifflet monitor the health of your data pipelines in real time, using features like data freshness checks, anomaly detection, and data quality monitoring. Together, they give you both visibility and trust in your data.

What makes Sifflet stand out among the best data observability tools in 2025?

Great question! Sifflet shines because it treats data observability as both an engineering and a business challenge. Our platform offers full end-to-end coverage, strong business context, and a collaboration layer that helps teams resolve issues faster. Plus, with enterprise-grade security and scalability, Sifflet is built to grow with your data needs.

What made data observability such a hot topic in 2021?

Great question! Data observability really took off in 2021 because it became clear that reliable data is critical for driving business decisions. As data pipelines became more complex, teams needed better ways to monitor data quality, freshness, and lineage. That’s where data observability platforms came in, helping companies ensure trust in their data by making it fully observable end-to-end.

What role does real-time data play in modern analytics pipelines?

Real-time data is becoming a game-changer for analytics, especially in use cases like fraud detection and personalized recommendations. Streaming data monitoring and real-time metrics collection are essential to harness this data effectively, ensuring that insights are both timely and actionable.

Which industries or use cases benefit most from Sifflet's observability tools?

Our observability tools are designed to support a wide range of industries, from retail and finance to tech and logistics. Whether you're monitoring streaming data in real time or ensuring data freshness in batch pipelines, Sifflet helps teams maintain high data quality and meet SLA compliance goals.

Can better design really improve data reliability and efficiency?

Absolutely. A well-designed observability platform not only looks good but also enhances user efficiency and reduces errors. By streamlining workflows for tasks like root cause analysis and data drift detection, Sifflet helps teams maintain high data reliability while saving time and reducing cognitive load.

How is data freshness different from latency or timeliness?

Great question! While these terms are often used interchangeably, they each mean something different. Data freshness is about how up-to-date your data is. Latency measures the delay from data generation to availability, and timeliness refers to whether that data arrives within expected time windows. Understanding these differences is key to effective data pipeline monitoring and SLA compliance.
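To make the distinction concrete, here is a minimal sketch in Python that computes all three measures from a few timestamps. It is an illustration only, not Sifflet's implementation: the function names (`freshness`, `latency`, `is_timely`) and the sample timestamps are assumptions made up for this example.

```python
from datetime import datetime, timedelta, timezone


def freshness(latest_event_time: datetime, now: datetime) -> timedelta:
    """How up to date the data is: age of the newest record as of `now`."""
    return now - latest_event_time


def latency(generated_at: datetime, available_at: datetime) -> timedelta:
    """Delay between data being generated and becoming available."""
    return available_at - generated_at


def is_timely(available_at: datetime, deadline: datetime) -> bool:
    """Whether the data arrived within its expected time window."""
    return available_at <= deadline


# Hypothetical timestamps for a single batch (all values are assumptions).
generated_at = datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc)
available_at = datetime(2025, 1, 1, 6, 20, tzinfo=timezone.utc)
deadline = datetime(2025, 1, 1, 7, 0, tzinfo=timezone.utc)
now = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)

print(freshness(generated_at, now))         # 3:00:00
print(latency(generated_at, available_at))  # 0:20:00
print(is_timely(available_at, deadline))    # True
```

In this example the batch arrived on time with low latency, yet by 09:00 the data is already three hours old. That is why a pipeline can meet its delivery window and still fail a freshness check.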
